Rendering with Clusters

Rendering > Cluster

Toggles the connection to the cluster. Use cluster rendering to reduce rendering time by sharing the rendering process between multiple computers. Internally, the Scene Graph is transferred over the network to each computer, and the image is then split into tiles for rendering. The results are sent back to the host, where the final image is composed.

To configure a cluster, you must know the IP address or name of each host you intend to cluster. Each host must have a cluster service or version of VRED Professional installed.

To avoid checking out unneeded render node licenses, you can disable individual hosts from the cluster service.

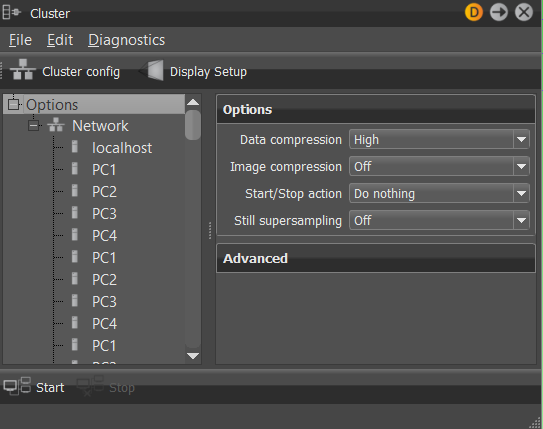

Head and rendering nodes are connected through a network. The configuration is presented as a tree view with the global options, network options, and options for each rendering node.

Clustering for Texture-based Lightmaps

VRED supports GPU and CPU clustering for texture-based lightmaps; however, keep the following in mind:

- The cluster must be started before users can distribute the calculation of lightmaps on a cluster.

- When calculating lightmaps with the GPU and the localhost is part of the cluster setup, ensure the graphics card on the localhost master has enough memory, as the master has to hold the scene twice.

- When calculating lightmaps with the CPU and the localhost is part of the cluster setup, ensure you have enough RAM.

Use the VRED Cluster to distribute the calculation of lightmaps and lightmap UV generation on multiple machines.

It is not possible to use GPU Raytracing or VRED Cluster to calculate vertex-based shadows.

How to Configure a Cluster

- Choose Rendering > Cluster to open the Cluster module.

- In the left panel, select Options and choose Edit > Add network.

- Do any of the following:

  - To add rendering nodes individually, choose Edit > Add compute node and provide the Name under Compute node in the right panel.

  - To add many nodes at once, choose Edit > Cluster config to open the Cluster config dialog box.

In the Configuration text box, add the configuration. A wide range of formats is recognized, including the connection string from the Cluster module in previous VRED releases.

PC1 PC2 PC3 PC4

PC1, PC2, PC3, PC4

PC1 - PC4

Master1 {PC1 PC2 PC3}

Master1 [forwards=PC1|PC2|PC3]

Master1 {C1PC01 - C1PC50} Master2 {C2PC00 - C2PC50}

You can also read the configuration from an externally generated file. For example, a startup script could generate a file that contains the names of all usable cluster nodes. If the Read configuration from file field contains a valid file name, then the configuration is read each time the Start button is clicked.

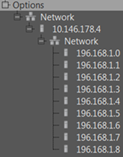

Note: If the cluster nodes are in a local network that is not accessible from the host where VRED is running, then you must configure an intermediate node. This node must be connected to the same network as the host where VRED is running and, through a second network card, to the cluster network. In this setup, you have to configure two networks. The first one is the network that connects VRED with the gateway node. The second is the network that connects the cluster nodes.

- Set cluster, network, and render node options in the right panel of the dialog.

  - To display cluster options, select Options in the left panel.

  - To display network options, select the Network node in the left panel.

  - To display render node options, select the node in the left panel.
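The range notation among the recognized configuration formats (for example, PC1 - PC4) can be pictured with a small Python sketch. The `expand_range` helper is hypothetical and is not part of VRED. It only illustrates how such a range might expand to individual host names, assuming the hyphen is surrounded by spaces and both ends share the same prefix.

```python
import re

# Assumption: a range is written as "<prefix><number> - <prefix><number>"
# with whitespace around the hyphen, as in the examples in this section.
_RANGE = re.compile(r"(.*?)(\d+)\s+-\s+(.*?)(\d+)")


def expand_range(spec):
    """Expand 'PC1 - PC4' to ['PC1', 'PC2', 'PC3', 'PC4'].

    Anything that does not look like a range is returned as a single name.
    Zero padding is taken from the first number ('C1PC01' stays two digits).
    """
    text = spec.strip()
    match = _RANGE.fullmatch(text)
    if match is None:
        return [text]
    prefix, first, end_prefix, last = match.groups()
    if prefix != end_prefix:
        raise ValueError(f"range ends use different prefixes: {text!r}")
    width = len(first)
    return [f"{prefix}{n:0{width}d}" for n in range(int(first), int(last) + 1)]
```

A startup script could use a helper like this to write one host name per line into the file named in the Read configuration from file field.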

How to Import Legacy Cluster Configurations

The raytracing cluster configuration changed in VRED 2016 SR1, and the display cluster configuration changed in VRED 2017. You can import an old configuration and convert it to the new format.

In the Cluster dialog box, choose File > Import Legacy.

How to Use the Same Cluster Node Multiple Times

You can connect to the same cluster node from multiple instances of VRED. However, this can cause problems, as the available resources must be shared between multiple VREDClusterServer instances.

For example, performance can suffer because load balancing becomes unpredictable or because the other VREDClusterServer instance is using the CPU. When a second user tries to connect to the same cluster node, the following message appears:

There is already a VREDClusterServer running on one or more of the requested cluster nodes. Do you really want to start clustering and risk performance problems?

Another problem is the available memory. If large models are used, then it could be impossible to load multiple models into the memory of the cluster node. VRED tries to estimate how much memory is required. If the requested memory is not available, the following message appears:

There is already a VREDClusterServer running on one or more of the requested cluster nodes and there is likely not enough free memory. Do you really want to start clustering and risk server instability?

If VRED is forced to start the cluster server, then unpredictable crashes could happen on the server.

Disable a Host From the Cluster Service

You can disable individual hosts from cluster rendering. The disabled host does not check out a render node license.

- In the Menu Bar, choose Rendering > Render Settings to open the Render Settings dialog box.

- In the Render Settings dialog box, scroll down to the Cluster section, select Enable Cluster, and enter the host names.

- Add [RT=0] after a host to disable it.

Set up a Display Cluster

In a display cluster setup, the scene can be viewed on a number of hosts connected over a network. For a fully functional display cluster setup, the following information must be configured:

- Network setup: All display nodes, and optionally additional nodes for raytracing speedup, must be configured in the Cluster dialog box.

- Projection setup: You must define what should be visible on remote displays. For example, displays can be arranged as a tiled wall or as a cave.

- Display setup: You must define which part of the projection should be visible on which display. The exact location of the viewport can be defined. Passive or active settings are selectable.

- In the Menu Bar, choose Rendering > Cluster to open the Cluster dialog box.

- Set up display nodes and any additional nodes for raytracing.

- Click Display Setup to open the Display configuration dialog box.

- Set up projections.

  Multiple projection types are supported. You can define projections for tiled walls, caves, or arbitrary projection planes. You can define multiple projection types for multiple displays. For example, VRED can handle a tiled display parallel to a cave projection.

  Projection types, like Tiled Wall or Cave, generate child projections for each single display. Child projections are read-only. If the generated projections must be changed to fit the real-world situation, right-click them and choose Convert to planes to convert them to plane projections. Then, you can edit each plane individually.

- Set up displays.

  Once the projection is defined, each projection must be mapped to a window on one of the cluster nodes. All windows belonging to the same display are grouped together into a display node.

  Displays, Windows, and Viewports can be copied and pasted using the right-click menu. This simplifies the definition if, for example, the whole display is composed of similar windows.

- Enable Hardware Sync.

  VRED supports swap sync between multiple cluster nodes. Sync hardware, like the NVIDIA Quadro Sync, must be installed, properly configured, and connected. If all these preconditions are met, then the Hardware Sync property of the display properties can be enabled. All connected displays then perform their buffer swaps in sync.
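To make the idea of mapping parts of a projection to individual displays more concrete, here is a minimal Python sketch that splits a projection into equal tiles for a tiled wall. The function name and the normalized (left, bottom, right, top) convention are assumptions made for illustration. They do not describe VRED's internal data model or the values shown in the Display configuration dialog box.

```python
def tile_viewports(columns, rows):
    """Split the unit square into equal tiles.

    Returns one (left, bottom, right, top) tuple per tile in normalized
    coordinates, ordered row by row starting at the bottom-left tile.
    """
    if columns < 1 or rows < 1:
        raise ValueError("columns and rows must be at least 1")
    tiles = []
    for row in range(rows):
        for col in range(columns):
            tiles.append(
                (col / columns, row / rows, (col + 1) / columns, (row + 1) / rows)
            )
    return tiles
```

For a wall of two displays side by side, `tile_viewports(2, 1)` yields the left half and the right half of the projection.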

Network Requirements

The network hardware is the limiting factor for the maximum reachable frame rate and the speed with which the Scene Graph can be distributed to the rendering nodes in a cluster. We have measured frame rates and data transfer rates on a 32-node cluster with different network configurations. We used a very simple scene with CPU rasterization to generate the images to ensure the rendering speed is not CPU bound. All measurements were taken in a dedicated network without other traffic and with well-configured network components.

The data transfer rate is always limited by the slowest network link in the cluster. Infiniband can in theory transfer up to 30GBit/s, but the 10GBit link from the host makes it impossible to reach this speed. Currently, we have no experience with large clusters connected by 10GBit Ethernet. The 10GB->Infiniband configuration has been tested with up to 300 nodes.

| Network | Uncompressed (MB/s) | Medium (MB/s) | High (MB/s) |
|---|---|---|---|
| 10GB->Infiniband | 990 | 1100 | 910 |
| 10GB->1GB | 110 | 215 | 280 |
| 1GB | 110 | 215 | 280 |
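As a rough illustration of what these transfer rates mean in practice, the following Python sketch estimates how long distributing a Scene Graph would take. The rates are copied from the table above. The function name is hypothetical, and the sketch assumes the measured rates apply to the uncompressed scene size and stay constant for the whole transfer.

```python
# Measured Scene Graph transfer rates in MB/s, taken from the table above.
SCENE_TRANSFER_MB_PER_S = {
    "10GB->Infiniband": {"uncompressed": 990, "medium": 1100, "high": 910},
    "10GB->1GB": {"uncompressed": 110, "medium": 215, "high": 280},
    "1GB": {"uncompressed": 110, "medium": 215, "high": 280},
}


def transfer_seconds(scene_size_mb, network, compression="uncompressed"):
    """Estimate the Scene Graph transfer time in seconds.

    Assumption: the rate refers to the uncompressed scene size.
    """
    rate = SCENE_TRANSFER_MB_PER_S[network][compression]
    return scene_size_mb / rate
```

Under these assumptions, a 2200 MB scene would need about 20 seconds over plain 1GB Ethernet without compression and about 2 seconds over 10GB->Infiniband with medium compression.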

In contrast to the transfer rate of the Scene Graph, the frame rate is, in some cases, not limited by the slowest connection. If the rendering nodes are connected to a 1GBit switch with a 10GBit uplink to the host, then 10 rendering nodes can, in sum, utilize the whole 10GBit uplink. On large clusters, network latency becomes more important, so Infiniband should be the preferred cluster network.

| Resolution | Network | Uncompressed (fps) | Lossless (fps) | Medium lossy (fps) | High lossy (fps) |
|---|---|---|---|---|---|
| 1024x768 | 10GB->Infiniband | 250 | 220 | 300 | 250 |
| 1024x768 | 10GB->1GB | 250 | 220 | 300 | 250 |
| 1024x768 | 1GB | 50 | 100 | 145 | 220 |
| 1920x1080 | 10GB->Infiniband | 130 | 120 | 200 | 130 |
| 1920x1080 | 10GB->1GB | 130 | 120 | 200 | 130 |
| 1920x1080 | 1GB | 20 | 40 | 50 | 130 |
| 4096x2160 | 10GB->Infiniband | 40 | 40 | 55 | 40 |
| 4096x2160 | 10GB->1GB | 40 | 40 | 55 | 40 |
| 4096x2160 | 1GB | 5 | 10 | 15 | 40 |
| 8192x4320 | 10GB->Infiniband | 10 | 12 | 14 | 13 |
| 8192x4320 | 10GB->1GB | 10 | 12 | 14 | 13 |
| 8192x4320 | 1GB | 1 | 2 | 3 | 10 |
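The link between resolution, frame rate, and network load can be estimated with a short calculation. The Python sketch below computes the traffic generated by uncompressed frames. It assumes 3 bytes per pixel, which is an assumption for illustration only, since the pixel format VRED actually sends over the network is not stated here.

```python
def required_mb_per_s(width, height, fps, bytes_per_pixel=3):
    """Return the uncompressed image traffic in MB/s (1 MB = 10**6 bytes).

    bytes_per_pixel=3 is an assumed RGB format, not a documented VRED value.
    """
    return width * height * bytes_per_pixel * fps / 1_000_000
```

For 1920x1080 at 20 fps, this gives roughly 124 MB/s, which is close to the 125 MB/s a 1GBit link can carry in theory. Under the stated assumption, that is in line with the uncompressed 1GB row of the table.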

We have tested Mellanox and QLogic/Intel Infiniband cards. For 10 GB Ethernet, we used an Intel X540-T1 network card.

VRED will try to use as much bandwidth as possible. This can cause problems for other users on the same network. Conversely, large file transfers on the same network will slow down the rendering. If possible, use dedicated network infrastructure for the cluster.

Start the Cluster Service

For cluster rendering, a rendering server must be started on the rendering node. To be able to automate this process, VREDClusterService is used. This program starts and stops cluster servers on demand.

By default, the service listens on port 8889 for incoming connections. Only one service can be started for a single port at a time. To run multiple services, each service must use a different port. Use the -p option to specify the port.

For testing, VREDClusterService can be started manually. Adding the options -e -c will produce some debug information on the console.

On Windows, the VRED installer will add a system service "VRED Cluster Daemon". This service is responsible for starting VREDClusterService on system startup. You can check this by selecting "view local services" from the Windows Control Panel. The path should point to the correct installation, startup type should be "automatic", and service status should be "started".

On Linux, the service must be started by adding bin/vredClusterService to the init system or by running bin/clusterService manually.

- Click Start Cluster at the bottom of the dialog box.

  This starts the VREDClusterService in Windows. This service will process the rendering, instead of VRED itself.

- In the Main Menu, select Rendering > Render Settings and enter a path to which to save the rendering.

- In the Render Settings dialog box, click Render.

  The Cluster Snapshot dialog box opens. It shows you a preview of the rendering and its progress.

- At any point during the rendering process, select Update Preview in the Cluster Snapshot dialog box to update the preview image.

- You can click Cancel & Save at any point to abort the rendering and save its current state to a file, or wait until the rendering is finished and click Save.

Disable a Host From the Cluster Service

You can disable single hosts from the cluster service. The excluded hosts do not check out a render node license.

- In the Main Menu, choose Rendering > Render Settings to open the Render Settings dialog box.

- In the Render Settings dialog box, scroll to the Cluster section and select Enable Cluster.

- Enter the host names and add [RT=0] after the hosts to disable.

VRED Cluster on Linux

Extracting the Linux Installation

The Linux VREDCluster installer is shipped as a self-extracting binary. The filename of the package contains the version number and a build date. To extract the content to the current directory, run the installer as a shell command:

sh VREDCluster-2016-SP4-8.04-Linux64-Build20150930.sh

This creates the directory VREDCluster-8.04, containing all the files required for cluster rendering. Each cluster node must have read permission to this directory. It can be installed individually on each node or on a shared network drive.

To be able to connect a cluster node, the cluster service must be started. This can be done by calling the following command manually or by adding the script to the Linux startup process.

VREDCluster-8.04/bin/clusterService

Automatically Start VRED Cluster Service on Linux Startup

On Linux, there are many ways to start a program on system startup, and they differ slightly between distributions. One possible solution is to add the start command to /etc/rc.local. This script is executed on startup and on each runlevel change.

If the installation files are, for example, stored in /opt/VREDCluster-8.04, then rc.local should be edited as follows:

#!/bin/sh -e

# Stop any running service; "|| true" keeps "sh -e" from aborting when none is running.

killall -9 VREDClusterService || true

/opt/VREDCluster-8.04/bin/clusterService &

exit 0

If you do not want to run the service as root, you could start the service, for example, as vreduser.

#!/bin/sh -e

# Stop any running service; "|| true" keeps "sh -e" from aborting when none is running.

killall -9 VREDClusterService || true

# Run the start command as vreduser; su needs -c to take a command string.

su - vreduser -c '/opt/VREDCluster-8.04/bin/clusterService &'

exit 0

Another option to start the VRED service on system startup is to add an init script to /etc/init.d. Copy the init script from the installation directory bin/vredClusterService to /etc/init.d. Then, you have to activate the script for different run levels.

On Red Hat or CentOS Linux, use: chkconfig vredClusterService on

On Ubuntu, use: update-rc.d vredClusterService defaults

Connecting a License Server

The address of the license server must either be stored in the config file .flexlmrc in your home directory or be set as the environment variable ADSKFLEX_LICENSE_FILE. If the server is named mylicenseserver:

~/.flexlmrc should contain: ADSKFLEX_LICENSE_FILE=@mylicenseserver

or add to your login script: export ADSKFLEX_LICENSE_FILE=@mylicenseserver

Make sure to set up the environment for the user that starts the cluster service.
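When many nodes have to be prepared, the license entry can be generated by a script. The following Python sketch builds the line described above and writes it to a file. The helper names are hypothetical, and the sketch assumes the file holds only this one entry, so it overwrites any existing content.

```python
from pathlib import Path


def license_line(server):
    """Build the license server entry for the given host name."""
    return f"ADSKFLEX_LICENSE_FILE=@{server}"


def write_flexlmrc(server, path=None):
    """Write the entry to `path`, or to ~/.flexlmrc when no path is given.

    Caution: the target file is overwritten, not appended to.
    """
    target = Path(path) if path is not None else Path.home() / ".flexlmrc"
    target.write_text(license_line(server) + "\n")
    return target
```

Run it as the user that starts the cluster service, because the file is read from that user's home directory.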

Accessing Log Information

Log files are written to the directory /tmp/clusterServer/log and should be viewed with a web browser. For troubleshooting, it can be helpful to start the cluster service in console mode. Stop a running cluster service and start bin/clusterService -e -c manually. All debug and error messages are then written to the console.

Useful Commands

Check whether the service is running: ps -C VREDClusterService

Stopping the cluster service: killall -9 VREDClusterService

Stopping a cluster server: killall -9 VREDClusterServer

Fully Nonblocking Networks/Blocking Networks

A fully nonblocking network allows all connected nodes to communicate with each other at full link speed. For a 16-port gigabit switch, this means that the switch is able to handle the traffic of 16 ports reading and writing in parallel. This requires an internal bandwidth of 32 GBit/s. VRED works best with nonblocking networks. For example, the transfer of the Scene Graph is done by sending packets from node to node, like a pipeline. This is much faster than sending the whole scene directly to each node.
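The 32 GBit/s figure for the 16-port gigabit switch follows from every port sending and receiving at full speed at the same time. A minimal Python sketch of that arithmetic, with a hypothetical function name:

```python
def nonblocking_bandwidth_gbit(ports, link_gbit=1.0):
    """Internal bandwidth a switch needs to be fully nonblocking.

    Each port reads and writes at link speed in parallel, hence the factor 2.
    """
    return ports * 2 * link_gbit
```

The same calculation shows that a 48-port gigabit switch would need 96 GBit/s of internal bandwidth to be fully nonblocking.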

If possible, do not use network hubs. A hub sends incoming packets to all connected nodes. This results in a very slow Scene Graph transfer.

On large clusters, fully nonblocking networks can be very expensive. If the cluster nodes are connected to multiple switches, then good performance can be achieved by simply sorting the rendering nodes by the switches they are connected to.

For example, suppose you have a cluster with 256 nodes connected to 4 switches. The worst configuration would be:

Node001 Node177 Node002 Node200 Node090 ...

A better result could be achieved with a sorted list:

Node001 - Node256

If this configuration causes any performance problems, you could try to model your network topology into the configuration:

Node001 { Node002 - Node064 }

Node065 { Node066 - Node128 }

Node129 { Node130 - Node192 }

Node193 { Node194 - Node256 }

With this configuration, the amount of data that is transferred between the switches is efficiently reduced.
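For large clusters, a topology-aware configuration like the one above can be generated instead of typed by hand. The Python sketch below is a hypothetical helper, not part of VRED. It assumes that nodes are numbered consecutively, that each switch hosts the same number of nodes, and that the first node on each switch acts as the head of its group.

```python
def topology_config(total_nodes, switches, prefix="Node", width=3):
    """Return one configuration line per switch.

    Assumes consecutive numbering starting at 1 and an equal number of
    nodes per switch; the first node of each group heads that group.
    """
    if total_nodes % switches != 0:
        raise ValueError("total_nodes must be divisible by switches")
    per_switch = total_nodes // switches
    if per_switch < 2:
        raise ValueError("each switch needs at least two nodes")
    lines = []
    for index in range(switches):
        first = index * per_switch + 1
        last = first + per_switch - 1
        head = f"{prefix}{first:0{width}d}"
        members = f"{prefix}{first + 1:0{width}d} - {prefix}{last:0{width}d}"
        lines.append(f"{head} {{ {members} }}")
    return lines
```

Calling `topology_config(256, 4)` reproduces the four lines shown above.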

Troubleshooting

Unable to Connect a Rendering Node

- Use ping hostname to check if the node is reachable.

- Try to use the IP address instead of the host name.

- The port 8889 must be allowed through the firewall.

- VREDClusterService must be started on the rendering node. Try to connect over a web browser with http://hostname:8889.
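The reachability checks in this list can also be scripted. The following Python sketch tests whether a TCP connection to the service port can be opened. The function name is hypothetical, and 8889 is the default port mentioned earlier. A successful connection only shows that something is listening on that port, not that it is VREDClusterService.

```python
import socket


def service_reachable(host, port=8889, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable host names.
        return False
```

If this returns False for the host name but True for the IP address, name resolution is the likely cause. If both fail, check the firewall and whether the service is running.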

Unable to Use Infiniband to Connect Cluster Servers

- Communication over Infiniband without an IP layer is only supported on Linux.

- The Infiniband network must be configured properly. Use ibstat to check your network state or use ib_write_bw to check the communication between two nodes.

- Check ulimit -l. The amount of locked memory should be set to unlimited.

- Check ulimit -n. The number of open files should be set to unlimited or to a large value.

- If it's not possible to run VREDClusterServer over Infiniband, in most cases, IP over Infiniband can be used with very little performance loss.
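The two ulimit values from this list can also be read programmatically, for example from a node health check. This Python sketch uses the standard `resource` module, which is available on Unix systems only. The function names are hypothetical. Note that the locked-memory limit is reported in bytes here, whereas ulimit -l typically prints kilobytes.

```python
import resource


def describe(limit):
    """Render a limit value the way ulimit does for the unlimited case."""
    return "unlimited" if limit == resource.RLIM_INFINITY else str(limit)


def cluster_limits():
    """Return the soft limits for locked memory and open files."""
    locked_soft, _ = resource.getrlimit(resource.RLIMIT_MEMLOCK)
    files_soft, _ = resource.getrlimit(resource.RLIMIT_NOFILE)
    return {
        "locked_memory_bytes": describe(locked_soft),
        "open_files": describe(files_soft),
    }
```

The values apply to the process that runs the check, so run it as the same user and in the same environment that starts the cluster service.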

Occasional Hangs Despite Otherwise High Frame Rates

- The network might be in use by other applications.

- Try to change network speed to Auto.

- Try to reduce image compression or set image compression to Off.

The Frame Rate Is Not as High as Expected

- If the CPU usage is high, rendering speed is limited by the CPUs. Frame rates can be increased by reducing the image quality.

- If the CPU usage is low and the network utilization is high, then enabling compression could provide higher frame rates.

- Small screen areas with very complex material properties can lead to a very bad load balancing between the rendering nodes. The result is a low CPU usage and a low network usage. The only option, in this case, is to locate these hot spots and reduce the material quality.

Why Is the Network Utilization Less Than 100%

- If the rendering speed is limited by the CPU, then network utilization will always be less than 100%. Providing a faster network will, in this case, not provide a higher frame rate.

- Try to use tools like iperf to check the achievable network throughput. On 10 GB Ethernet, VRED uses multiple channels to speed up communication. This could be simulated with iperf -P4. Check the throughput in both directions.

- Network speed is limited by the used network cards, and also by the network switches and hubs. If the network is used by many people, then the throughput can vary strongly from time to time.

- Tests have shown that it is very difficult to utilize 100% of a 10 GB Ethernet card on Windows. Without optimization, you can expect 50%. You can try to use measurement tools and adjust performance parameters for your network card. If the rendering speed is really limited by your network, then you should set image compression to "lossless" or higher.

- For Intel 10GB X540-T1 Ethernet cards, the following options could increase the network throughput on Windows:

  - Receive Side Scaling Queues: Default = 2, changed to 4

  - Performance Options / Receive Buffers: Default = 512, changed to 2048

  - Performance Options / Transmit Buffers: Default = 512, changed to 2048

  - Sometimes installing the network card into another PCI slot can increase the network throughput.

- On Linux, you could increase the parameters net.ipv4.tcp_rmem, net.ipv4.tcp_wmem, net.ipv4.tcp_mem in /etc/sysctl.conf.

Why Is the CPU Utilization Less Than 100%

- If the rendering speed is limited by the network, CPU utilization will not reach 100%.

- To distribute the work between multiple rendering nodes, the screen is split into tiles. Each node then renders a number of these tiles. In most cases, it is not possible to assign each node exactly the same amount of work. Depending on the scene, this can lead to a low CPU utilization.
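The effect of uneven tile costs on CPU utilization can be illustrated with a toy model. The Python sketch below assigns tiles to nodes in a fixed round-robin order and reports how busy the nodes are on average while waiting for the slowest one. Both the round-robin assignment and the cost values are assumptions for illustration. This does not describe how VRED actually balances load.

```python
def utilization(tile_costs, nodes):
    """Average node utilization for a static round-robin tile assignment.

    The frame is finished when the busiest node is done, so utilization is
    the total work divided by (number of nodes * load of the busiest node).
    """
    if nodes < 1 or not tile_costs:
        raise ValueError("need at least one node and one tile")
    loads = [0.0] * nodes
    for index, cost in enumerate(tile_costs):
        loads[index % nodes] += cost
    return sum(loads) / (nodes * max(loads))
```

With four equally expensive tiles on two nodes, the result is 1.0. If one tile costs nine times as much as the others, `utilization([1, 1, 1, 9], 2)` drops to 0.6, because one node idles while the other finishes the expensive tile.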

Mellanox Infiniband With Windows

- With the Mellanox MLNX_VPI_WinOF Infiniband driver for Windows, it is possible to use TCP over Infiniband to connect cluster nodes. Use the following network settings:

  - Network: selected

  - Network type: Ethernet

  - Network speed: 40 GB

  - Encrypted: Not selected

- It is not possible to connect to a Linux network if Linux is configured to use "Connected Mode". Make sure the MTU on all connected nodes is equal.