Hands in XR

Hands are used for gestures and make interacting with a 3D scene feel more natural and immersive, whether in VR or in a real-world setting through MR.

Hand Gestures

Only supported for the Varjo XR-3.

This section provides the supported VRED hand gestures for interacting with your digital designs. Your hand movement is tracked as you tap parts of a model with your index finger to initiate touch events, select tools from the xR Home Menu, or interact with HTML 5 HMI screens. Use these gestures to navigate scenes, without the need for controllers.

The virtual hands will automatically adjust to match your real-world ones.

Touch Interactions

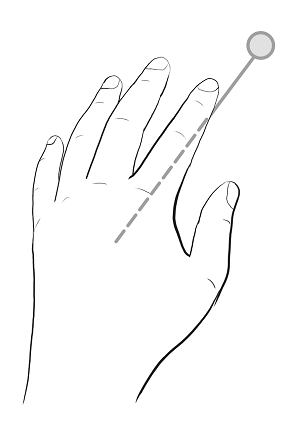

Use your index finger’s tip (on either hand) to touch and interact with scene content, such as the xR Home Menu, objects with touch sensors, or HTML 5 HMI screens.

xR Home Menu

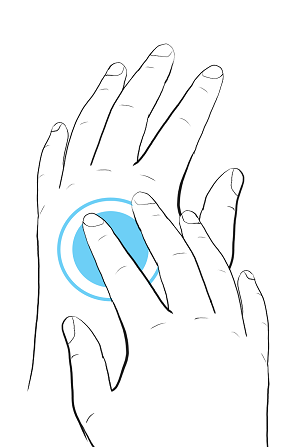

To open and close the xR Home Menu, use the same gestures.

Move your index finger to the palm of your other hand. This opens the menu. Now you can use your index finger to select an option from the menu. Repeat the initial gesture to close the xR Home Menu.

For information on the xR Home Menu and its tools, see xR Home Menu.

If your hands are in front of a virtual object, but are occluded by it, enable Hand Depth Estimation.

Teleport

To teleport, you will need to initiate the teleporter, orient the arc and arrow, then execute a jump to the new location. When finished, terminate the teleporter.

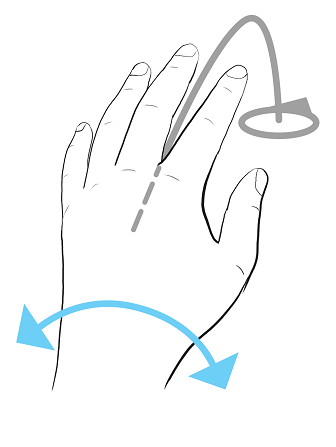

Initiating and Terminating Teleport

To initiate and terminate teleporting, use the same gestures.

Using one hand, tap the back of the other hand. This initiates the teleport from the tapped hand. Now orient the arc and teleport. Repeat this gesture to terminate the teleporter.

Teleport Orientation

Rotate your wrist, while the teleport arc is displayed, to orient the teleporter. Now teleport.

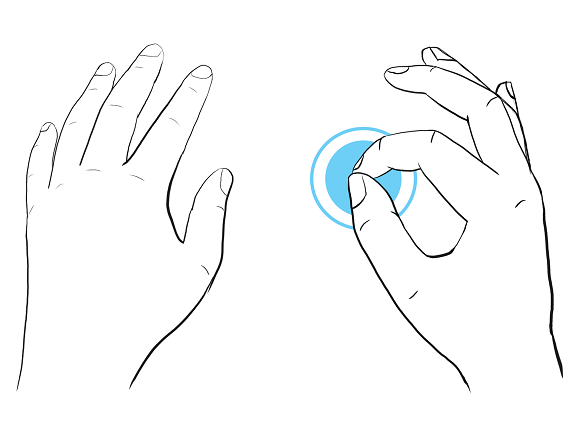

Teleporting

With the hand not currently displaying the teleport arc, pinch your index finger to thumb. This accepts the teleport arc location and orientation, executing the teleport. When finished, terminate the teleport to exit the tool.

For information on teleporting, see Teleporting.

Laser Pointer

To use the Laser Pointer, you will need to initiate, execute, then terminate it.

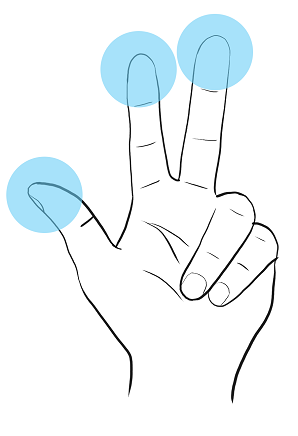

Initiating and Terminating the Laser Pointer

To initiate and terminate the Laser Pointer, use the same gestures.

Point your thumb, index, and middle fingers out with your palm facing the camera. Now use the Laser Pointer to point at things or trigger interactions with scene content. Repeat this gesture to terminate the Laser Pointer.

Using the Laser Pointer

Use your index finger to point at scene content.

Pinch your index finger and thumb together to trigger interaction with scene content, such as selecting tools from the xR Home Menu, activating a touch sensor, or interacting with HTML 5 HMI screens. When finished, terminate the Laser Pointer to exit the tool.

Hands in VR

VRED comes with one set of standard hands, which support joints/bones with pre-skinned vertices for hand representation. This makes for a smoother transition between hand poses. Gestures are driven by an HMD-specific controller setup.

An X-Ray material is used for the hands to improve the experience.

To enter VR, select the VR feature in the xR Home Menu.

Using Hands in VR

When activating the Oculus Rift or OpenVR mode, one hand appears per tracked controller.

VRED uses the OpenVR 1.12.5 SDK.

You can use the hands to interact with WebEngines and touch sensors in the scene. Only the index finger can be used for interaction. When the finger touches interactable geometry, a left mouse press/move/release is emulated for WebEngines and touch sensors on that geometry.

When using a script with vrOpenVRController, the hands will disappear automatically. You can enable them again with controller.setVisualizationMode(Visualization_Hand).
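The call above could be wrapped in a small helper that restores the hand visualization for several controllers at once. This is a minimal sketch, not a VRED-provided script: show_hands is a hypothetical name, and the commented usage assumes the script runs inside VRED, where vrOpenVRController and Visualization_Hand are defined, and that controllers are created by name (for example "Controller0").

```python
def show_hands(controllers, hand_mode):
    """Switch every given controller to the hand visualization.

    controllers: objects exposing setVisualizationMode(), such as
                 vrOpenVRController instances inside VRED.
    hand_mode:   the mode constant to apply (Visualization_Hand in VRED).
    Returns the number of controllers that were updated.
    """
    updated = 0
    for controller in controllers:
        controller.setVisualizationMode(hand_mode)
        updated += 1
    return updated


# Usage inside VRED (assumption: controllers are created by name):
# left = vrOpenVRController("Controller0")
# right = vrOpenVRController("Controller1")
# show_hands([left, right], Visualization_Hand)
```

Passing the controllers and the mode as arguments keeps the helper free of VRED globals, so it can be tried with stand-in objects before running it in a scene.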

The following example scenes contain touch sensors and WebEngines to interact with:

- When working in OpenVR and in Oculus Rift mode:

  - VR-hands-touchsensor.vpb - Touch the car shape with the index finger to toggle the material (touch sensor on car shape).

  - VR-hands-touchsensor-2.vpb - Touch the car shape with the index finger to show 3 spheres, which can be touched to change the material (touch sensors on car shape and on spheres).

  - VR-hands-webengine.vpb - Touch the calculator buttons with the index finger. The calculator material is connected to a web engine. Touching the web engine geometry with the index finger triggers mouse events on the website.

- When working in OpenVR:

  - VR-hands-webengine-buttonVibration-openvr.vpb - The same as VR-hands-webengine.vpb, except that the website triggers controller vibration. The calculator web engine has JavaScript code that sends a requestVibration() Python command to the VRED WebInterface when a button on the website is hit. This triggers haptic feedback (vibrations) on the connected controller device.

How to Make Nodes Interactable

Nodes in the scene are not interactable by default. Make every node you want to interact with interactable using the Python command setNodeInteractableInVR(node, True). Enter the command in the Script Editor and press Run. For more details, see the Python documentation (Help > Python Documentation).

The setNodeInteractableInVR command is not persistent and must be executed every time you load a scene. We recommend saving the scene with the script, so the nodes are interactable the next time you open the scene.
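A script saved with the scene might mark several nodes at once. The following is a minimal sketch under stated assumptions: make_interactable and the node names are hypothetical, the lookup function is assumed to return an object with isValid() (as VRED node pointers do), and the commented usage assumes the Script Editor provides findNode alongside setNodeInteractableInVR.

```python
def make_interactable(names, find_node, set_interactable):
    """Mark each named node as interactable in VR.

    names:            node names to look up.
    find_node:        lookup function (findNode inside VRED).
    set_interactable: setNodeInteractableInVR inside VRED.
    Returns the names that could not be resolved to a valid node.
    """
    missing = []
    for name in names:
        node = find_node(name)
        # Assumption: a failed lookup yields None or an invalid node object.
        if node is None or not node.isValid():
            missing.append(name)
            continue
        set_interactable(node, True)
    return missing


# Usage inside VRED (node names are placeholders):
# not_found = make_interactable(["Car_Body", "Door_Left"],
#                               findNode, setNodeInteractableInVR)
# print("Nodes not found:", not_found)
```

Returning the unresolved names makes it easier to spot renamed or missing nodes after a scene has been edited.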

VR Hand Poses

Besides using your fingers and hands for pressing and touching buttons on the HTC VIVE or Oculus Touch Controllers, use them to communicate with others.

Use the following poses:

- Forefinger to point and touch objects

- Thumbs up/down to communicate if something is good or bad

- Open hand for waving

- Pinch to pick up objects

- Clenched fist

If you have a Varjo XR-3, there are gestures for interacting with your digital designs. See Hand Gestures.

Customization for VR Hands

Use Python scripting and HTML5 to create custom setups. You can find script files in the VRED Examples folder (C:\ProgramData\Autodesk\VREDPro-11.0\Examples).

Here are some of the added scripts:

- To enable haptic feedback (controller vibration) when a hand touches an interactable node:

  - VR-hands-vibrate-openvr.py - For OpenVR mode

  - VR-hands-vibrate-oculus.py - For Oculus Rift mode

- To change hand color / hit point color:

  - VR-hands-color-openvr.py

  - VR-hands-color-oculus.py

- To interact from a distance (instead of directly touching something) by just pointing with the index finger at nodes in the scene. A sphere appears at the hit point. When activating the "Pointing" pose (Grip button pressed, Index trigger button not pressed), touch sensors and web engines can be executed.

  - VR-hands-pointing-openvr.vpb - For OpenVR mode

Hands in MR

Currently, only Varjo XR HMDs are supported for MR.

In MR (mixed reality), VRED replaces the VR X-Ray material hands with your own.

To enter MR, select the MR feature in the xR Home Menu.

Be aware that the XR-1 and XR-3 support different functionality in MR.

- The XR-1 supports hand depth estimation and marker tracking.

- The XR-3 supports hand depth estimation, marker tracking, and hand tracking (gestures). This means hand gestures can be used for scene interaction in place of controllers. Since VRED is replacing the standard hands, which support joints/bones, with your real-world hands, you can use Python to connect hand trackers for scene interaction.

Tip: Use the Virtual Reality preferences to set default behaviors for hand tracking, teleporting, marker tracking, and your HMD.

Connecting Hand Trackers with Python

Only available for Varjo XR-3 users.

To see how to connect hand tracking using Python, select File > Open Examples and navigate to the vr folder, where you'll find externalHandTracking.py. This provides an example of how to set up hand tracking.

- For the left hand, use vrDeviceService.getLeftTrackedHand()

- For the right hand, use vrDeviceService.getRightTrackedHand()

The returned object is of type vrdTrackedHand.
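The two calls above could be collected in one place, so later code can address each hand by side. This is a minimal sketch: get_tracked_hands is a hypothetical helper name, and the commented usage assumes the script runs inside VRED, where vrDeviceService is available.

```python
def get_tracked_hands(device_service):
    """Return both tracked hands, keyed by side.

    device_service: an object exposing getLeftTrackedHand() and
                    getRightTrackedHand() (vrDeviceService inside VRED).
    Each value is whatever the service returns; in VRED that is a
    vrdTrackedHand object.
    """
    return {
        "left": device_service.getLeftTrackedHand(),
        "right": device_service.getRightTrackedHand(),
    }


# Usage inside VRED:
# hands = get_tracked_hands(vrDeviceService)
# left_hand = hands["left"]
```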

Use Tracked Hands in VR

Only available for Varjo XR-3 users.

Use the Virtual Reality preferences to set the default behavior for hand tracking in VR. Choose Varjo Integrated Ultraleap for hand tracking in MR, or Custom to use other hand tracking devices. To adjust any offset between the tracked hands and the hands rendered in VR, use Translation Offset and Rotational Offset.

Setting up Hand Tracking for Other Devices

For the Custom Tracker option, you must provide all the tracking data to VRED's Python interface. How to do this varies from device to device. If you can access the tracking data from a Python script, set the data into the vrdTrackedHand objects returned by vrDeviceService.getLeftTrackedHand() and vrDeviceService.getRightTrackedHand(). This requires the transformation data of the tracked hand and/or the individual finger joints (see the externalHandTracking.py example file for how this works).

For testing, set the corresponding preferences, load the script, and enter VR. You may have to modify the script by changing values for hand and/or joint transforms to understand how everything works.

Hand Depth Estimation

Currently, only Varjo XR HMDs are supported for MR.

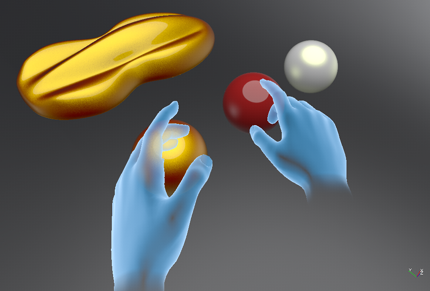

Hand Depth Estimation detects your real-world hands in the mixed reality video and shows them in front of a virtual object when they are closer than the object. When it is disabled, the rendering from VRED always occludes the real hands, even if the hands are closer than the rendered object.

Find this option in the xR Home Menu. To set a default behavior for this option, visit the Virtual Reality preferences > HMD tab > Varjo section.

(Comparison images: Hand Depth Estimation OFF and Hand Depth Estimation ON.)

Displaying Your Hands in Mixed Reality

Currently, only Varjo XR HMDs are supported for MR.

Use the Hand Depth Estimation tool to display your hands, while in mixed reality.

- While wearing a connected Varjo HMD that supports MR, select View > Display > Varjo HMD in VRED.

- Press the Menu button on your controller to access the xR Home Menu.

- Press the Hand Depth Estimation button to see your real-world hands in MR.