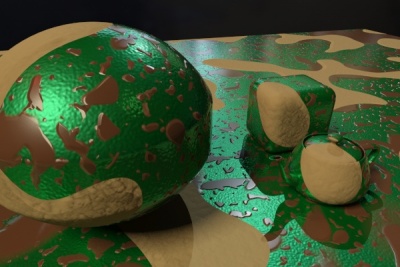

This image was rendered with simple math color combining. It rendered in 6 minutes.

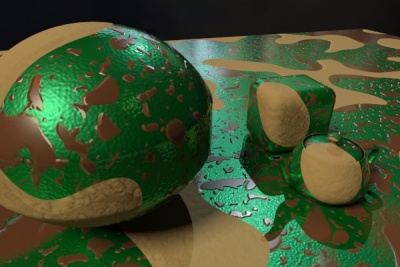

This is the exact same scene rendered with the layering shaders. It rendered in 58 seconds.

This page contains the background discussion on the layering shaders.

Layering is commonly used in computer graphics to create more complex and visually appealing materials. Uses range from blends of materials, through simple coated materials (e.g., shellac on wood, wet stone, etc.), to more complicated combinations (e.g., mud-splattered car paint, where the mud is a water layer on top of a diffuse mud layer, splattered across a multi-layered car paint with clearcoat, pigment, flakes, etc.).

Note: We are talking about physically plausible layering of materials here, and NOT about layering in a "Photoshop sense", with its variety of "blend modes", including those that have no natural physical basis. Such modes may be useful for layering textures to create an effect, but they are NOT useful when applied to the physics of blending materials.

From a production requirements perspective, the layering shaders must:

Questions of efficiency arise when shaders are layered with a simple color combining shader, such as mib_color_mix. Visually, of course, this may provide the desired look, but it has several issues.

Most importantly, as complexity increases, performance efficiency decreases geometrically. We explain this with an example.

The images above show a scene with a material consisting of several layers.

For a more challenging scene, the differences can be much more dramatic, especially in enclosed spaces, where runaway ray counts for reflections grow geometrically and can easily cause 10x or 100x slowdowns.

A similar test scene using lots of transparency had a difference between 22 seconds (with layering) and 15 minutes (without).

The layering shaders are a set of C++ mental ray shaders designed to work together as a shader kit. The kit contains specialized component combining shaders and a set of component shaders that provide a variety of shading component models. The implementation derives from both mia_material and technology developed across rendering products by NVIDIA ARC. In particular, the component elements are similar to what is available in the NVIDIA Material Definition Language (MDL). Because there is no predefined layer count, one can enhance any shading model with more elemental components, such as extra glossy or scatter components.

Below we examine the issues and how we address them with the layering library:

Imagine mixing three shaders: 80% of one, 20% of another, and 0% of a third. If plugged into a dumb math node, all three shaders will execute fully. So if all three shaders use 50 sample rays for glossy reflections, the render will shoot 150 rays, since the math node executes all three.

Furthermore, when these glossy reflection rays hit a secondary surface mixed similarly, the math node on the secondary surface executes all of its input shaders. Even if the individual shaders plugged into the mixer are clever like mia_material and shoot only a single ray at a higher trace depth, the mix will still shoot three rays if three of those shaders are input to it.

This may not sound like much, but in a geometrically expanding ray-tree, this quickly becomes completely unwieldy - the ray tree multiplying by three at every trace depth junction.
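The growth described above can be made concrete with a little arithmetic. The following is a minimal sketch, not part of the shader kit: it assumes every hit surface is mixed the same way, that each mixed shader shoots exactly one secondary ray per hit, and the helper name `rays_in_tree` is purely illustrative.

```python
def rays_in_tree(branching: int, max_depth: int) -> int:
    """Total secondary rays when every hit spawns `branching` new rays,
    summed over trace depths 1..max_depth."""
    return sum(branching ** depth for depth in range(1, max_depth + 1))


if __name__ == "__main__":
    # Compare a three-input math node (3 rays per hit) against a
    # layered material that traces a single ray per hit.
    for depth in range(1, 7):
        naive = rays_in_tree(3, depth)
        layered = rays_in_tree(1, depth)
        print(f"depth {depth}: naive={naive} layered={layered}")
```

Under these assumptions the naive mix traces 120 rays per eye ray at a trace depth of 4, where a single-ray-per-hit scheme traces 4.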

This may prove costly, often with little visual benefit. It may also counteract the use of unified sampling, which spends time in the areas of most visual benefit based on each eye ray result. Ideally, in this case we want minimal secondary ray tracing, using the adaptivity of unified sampling to focus where quality needs to be improved.

The problem is that the component shaders are not executed in relation to their importance. The first shader doesn't know that it contributes 80%, nor the second that it contributes 20%, nor the third that it contributes nothing and doesn't need to be called at all. All three are blindly executed as if each were the only shader representing the material.

To match visual importance, we'd prefer the first shader to use something like 80% of its rays, the second something like 20%, and the third shader not to be executed at all.

Because all the component inputs of the combining shaders are of type shader, they can be executed on demand (and not at all for our 0% example). In addition, the state->importance variable is adjusted prior to the call, so that the called shader can make choices based on it, e.g. reducing the ray count for glossy reflections. Finally, once we are below the first hit in trace depth, we trace only one of the components' secondary rays, chosen with a probability determined by the combining weights. This design takes advantage of the unified sampling model.
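The following Python sketch illustrates the idea of on-demand, importance-weighted evaluation. It is not the mental ray API: the `Component` signature, `mix_components`, and the way importance scales the sample count are all assumptions made for illustration, the weights are treated as relative and normalized, and the deeper-depth branch simplifies "trace one secondary ray" to "evaluate one component".

```python
import random
from typing import Callable, List, Tuple

# Hypothetical component signature: (importance, num_samples) -> scalar result.
Component = Callable[[float, int], float]


def mix_components(
    components: List[Tuple[float, Component]],
    base_samples: int,
    trace_depth: int,
    rng: random.Random,
) -> float:
    """Weighted mix that only calls components that can contribute."""
    # Zero-weight components are never executed.
    active = [(w, shader) for w, shader in components if w > 0.0]
    if not active:
        return 0.0
    total = sum(w for w, _ in active)

    if trace_depth == 0:
        # First hit: call every contributing component, but scale its
        # sample budget by its importance (at least one sample).
        result = 0.0
        for w, shader in active:
            importance = w / total
            samples = max(1, round(base_samples * importance))
            result += importance * shader(importance, samples)
        return result

    # Deeper hits: pick ONE component with probability proportional to
    # its weight. With normalized weights the pick is an unbiased
    # estimate of the mix, so no extra scaling is applied.
    weights = [w for w, _ in active]
    (w, shader), = rng.choices(active, weights=weights, k=1)
    return shader(w / total, 1)
```

For the 80% / 20% / 0% example with 50 base samples, this sketch gives the first component 40 samples and the second 10 at the first hit, and never calls the third.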

As explained above, this can result in anything from 10x to 100x speedups in renderings of scenes with complex layerings, due to the nature of the geometrically growing number of rays, and spending time only where visually necessary.

Similar to rays, light sampling provided by traditional light loops can be problematic.

If we layer three shaders that each contain a light loop, there will be three light loops. Each run through a light loop causes a shadow ray to be traced for every light sample, which can quickly become very time consuming. After all, in most scenes the shadow-ray count dominates, because every type of ray besides an environment ray causes shadow rays to be traced.

This issue is solved by a layering-shader-specific API that provides light samples. It stores the light samples and re-runs the light sampling only if so directed. Otherwise, it automatically re-uses the light samples after the first run through the loop. Each component can therefore iterate over the light samples, re-using their values, without causing shadow rays to be traced again and again.
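The caching behavior can be sketched in a few lines of Python. This is an illustration of the idea only, not the actual layering API: the class `LightSampleCache`, its `samples` method, and the `resample` flag are hypothetical names, and light samples are reduced to plain floats.

```python
from typing import Callable, List, Optional


class LightSampleCache:
    """Stores light samples so several components can share them."""

    def __init__(self, sample_lights: Callable[[], List[float]]):
        # `sample_lights` stands in for the expensive light loop that
        # traces shadow rays; it is a hypothetical callback.
        self._sample_lights = sample_lights
        self._samples: Optional[List[float]] = None

    def samples(self, resample: bool = False) -> List[float]:
        # Run the expensive sampling only on first use, or when the
        # caller explicitly asks for fresh samples.
        if self._samples is None or resample:
            self._samples = self._sample_lights()
        return self._samples


if __name__ == "__main__":
    runs = {"count": 0}

    def expensive_light_loop() -> List[float]:
        runs["count"] += 1  # each run would trace shadow rays
        return [0.5, 0.25]

    cache = LightSampleCache(expensive_light_loop)
    # Three layered components iterate over the same samples.
    for _ in range(3):
        for light in cache.samples():
            pass
    print("light loop runs:", runs["count"])
```

In this sketch three components iterating over the samples trigger a single run of the light loop instead of three.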

The speed benefit can be great (2x-20x) in scenes containing lots of transparency, because each shadow ray breaks down into many small ray segments, which makes the slowdown from repeated light loops even worse.

Though easy to do, the simple combining of single output shader components most likely ignores outputs from the more complex multiple output component shaders.

While one may combine secondary outputs through other 'math nodes', different shaders have different styles of outputs, and only certain kinds of outputs can be legally mixed in the first place. The complexity in the shader network becomes difficult to maintain for practical projects.

A layering shader specific API provides output to a variety of lighting pass specific framebuffers from within each component shader. The specification for its use derives from the Light Path Expressions (LPE) introduced in NVIDIA Material Definition Language (MDL). Light gathered from specified light paths may be accumulated into user framebuffers. Through string options one may identify to which user framebuffer a given supported light path may be written. More detail on the LPE passes supported is here.

The outputs are written after being weighted, so that they are additive. In other words, all the passes can be combined by adding them up. Many other shaders (like mia_material) have 'raw' and 'level' style outputs, and while these are useful on the output of a single shader, they cannot be combined in the same way. You cannot easily postpone the multiplication of a 'raw' and a 'level' value to compositing, because filtering samples to pixels is not reversible: the filtered product of the samples is generally not the product of the filtered values, which is difficult to address in post.
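A small numeric example shows why the multiplication cannot be postponed. The sample values below are invented for illustration, and pixel filtering is simplified to a plain average of two samples.

```python
def mean(values):
    """Stand-in for pixel filtering: a plain box average."""
    return sum(values) / len(values)


# Two samples landing in the same pixel (made-up values).
raw = [1.0, 0.2]
level = [0.1, 0.9]

# Weighting before filtering, as the layering shaders do.
weighted = [r * l for r, l in zip(raw, level)]
filtered_weighted = mean(weighted)

# Filtering 'raw' and 'level' separately, then multiplying in post.
recombined_in_post = mean(raw) * mean(level)

if __name__ == "__main__":
    print(filtered_weighted, recombined_in_post)
```

Here the pre-weighted result is about 0.14, while multiplying the separately filtered values gives about 0.3, so the two workflows disagree for the same pixel.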

The framebuffer writing mechanism also needs to handle transparency as described in Framebuffers.

To achieve this, the shaders that write to framebuffers need to blend in values that come from a call of mi_trace_transparent(). However, if the calls to mi_trace_transparent() live in leaf shaders in the shade tree, this will not be possible to control in the proper order (as the shade tree may contain several different calls to mi_trace_transparent())!

We now want the framebuffers written as per the Framebuffers page. This is solved with special handling in mila_material, which will:

In mental ray, photon and shadow shaders behave inherently differently from normal surface shaders. Just wiring together the outputs of two surface shaders does not turn them into a legal or even working photon shader, and may or may not work as a shadow shader (luck, basically).

The layering shaders separate photon and shadow shader function, with simplified component functionality.