Texture creation and texture manager

A unified mechanism for hardware (GPU) texture creation and management is exposed in the API. The textures are cached for reuse regardless of whether they are internally or externally loaded, and all memory management is handled internally.

Textures can be created either from an image on disk or from a block of pixels in CPU memory. The types of textures and the number of formats supported are much greater than in any of the legacy interfaces. The interfaces are also draw-API agnostic, unlike the legacy interfaces, which only support OpenGL.

A texture is represented by the class MTexture and the management is handled by the class MTextureManager.

There are three basic interfaces for acquiring a texture:

- Acquiring a texture from a file on disk: For this method, the currently available image codec types are supported. If any custom plug-in codecs have been added via the IMF plug-in interface, they are also supported. The loaded image is described by the class MTextureDescription. MTextureDescription itself is not a resource but a resource description.

- Acquiring a texture from a block of pixels residing in CPU memory: In this case an MTextureDescription is a required input to describe the pixels. It is the responsibility of the plug-in writer to provide the correct description for the data. Note that it is possible to allocate a texture referencing a NULL block of data.

- Acquiring a texture based on the output of a Maya shading node plug or node.

The MTextureManager::acquireTexture() and MTexture::update() methods that accept a file texture node as an input argument may be preferable to explicitly specifying a file texture name, as these methods internally determine the file name from the file texture node. See the developer kit example hwApiTextureTest for sample code demonstrating the usage of the acquireTexture() methods. See the example viewOverrideTrackTexture for sample code demonstrating the usage of the update() method.

Uniqueness of a texture is provided by the name given to the texture. In the case of images on disk, the file path provides the unique name. Otherwise, the plug-in code is responsible for determining what is unique. On acquisition, if a texture with the same name already exists, then it is returned.

If an empty string is passed as the unique name, then the texture is considered to be “unnamed”. The main difference between named and unnamed textures is that unnamed textures are not cached by the internal texture caching system, resulting in the acquisition creating a new texture instance for each method call.
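The lookup rule described above can be sketched in isolation. The following is a minimal illustration only, not the Maya implementation: `SketchTexture` and `SketchTextureCache` are hypothetical names that do not exist in the API, and the sketch models nothing beyond "same name returns the same instance, empty name is never cached".

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <unordered_map>

// Hypothetical stand-in for a GPU texture; not a Maya class.
struct SketchTexture {
    std::string name;
    int width;
    int height;
};

// Hypothetical name-keyed cache that illustrates the acquisition rule only.
class SketchTextureCache {
public:
    std::shared_ptr<SketchTexture> acquire(const std::string& name,
                                           int width, int height) {
        assert(width > 0 && height > 0);
        if (name.empty()) {
            // Unnamed: never cached, so every call creates a new instance.
            return std::make_shared<SketchTexture>(
                SketchTexture{name, width, height});
        }
        auto it = cache_.find(name);
        if (it != cache_.end()) {
            // A texture with the same name already exists: return it.
            return it->second;
        }
        auto texture = std::make_shared<SketchTexture>(
            SketchTexture{name, width, height});
        cache_.emplace(name, texture);
        return texture;
    }

    std::size_t cachedCount() const { return cache_.size(); }

private:
    std::unordered_map<std::string, std::shared_ptr<SketchTexture>> cache_;
};
```

In this sketch, a second acquisition under an existing name returns the first instance even if different dimensions are requested, which is why the choice of unique name is left to the plug-in code.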

As it is not the purpose of MTexture and the manager to handle CPU side pixel maps, additional image codecs cannot be added directly via this interface. Instead, existing interfaces such as MImage can be used in conjunction with these interfaces. In fact, any interface which creates a block of pixels in CPU memory can use the interface which accepts CPU data to acquire GPU textures.

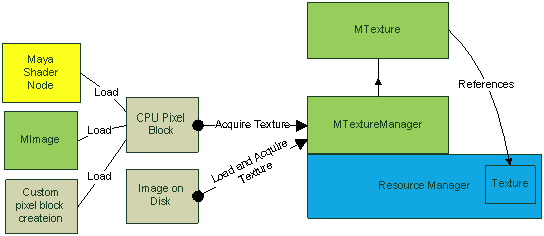

The relationships for texture acquisition are shown in the following diagram:

The most direct scenario is to load images from disk directly into GPU memory. In this scenario, CPU side memory is not persistently held. Other scenarios require a two-step process of allocating a block of pixels in CPU memory and then transferring the data to the GPU. Here, three options are shown: reading from a plug-in on a shader node, reading via the MImage class, and via some custom code.

The GPU handle to textures is accessible and is meant to be read only. As long as the data itself is not deleted or resized, it is possible to perform updates directly on textures via this GPU handle. For DirectX11, this is a texture handle; for OpenGL, it is a texture id (integer). See 2.4.1 Updating color textures and 2.4.2 Depth textures for more formalized interfaces for texture updating.

The general use case for textures is to bind them to shaders. As an example, an MTexture instance can be bound to a texture parameter on an MShaderInstance. If a texture is bound to a shader instance, this information is used during the render item categorization phase. For example, flagging the texture as having semi-transparent pixels (alpha values between 0 and 1) can be used to categorize a render item as being transparent. There are also other query and set methods for checking different alpha channel properties of a texture.
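A plug-in that fills textures from its own CPU data may need to work out for itself whether the pixels are semi-transparent before setting such a flag. The following is a minimal sketch of that check, assuming a tightly packed 8-bit RGBA buffer; `hasSemiTransparentAlpha` is a hypothetical helper, not part of the API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: returns true if any pixel in a tightly packed 8-bit
// RGBA buffer has an alpha value strictly between fully transparent (0)
// and fully opaque (255).
bool hasSemiTransparentAlpha(const std::vector<std::uint8_t>& rgba) {
    assert(rgba.size() % 4 == 0);
    for (std::size_t i = 3; i < rgba.size(); i += 4) {
        if (rgba[i] > 0 && rgba[i] < 255) {
            return true;
        }
    }
    return false;
}
```

A buffer whose alpha values are only 0 or 255 (a cutout) is not reported as semi-transparent by this sketch; whether such a texture should be treated as transparent is a separate decision.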

Updating color textures

Continuously re-acquiring texture instances in order to replace their contents is not recommended, as this can be expensive. This is especially true for unnamed textures, as each acquisition results in a new texture being allocated. If it is known that a texture can be reused, then it is better to acquire it once from the texture manager and then update it using the MTexture::update() interfaces.

Mip-maps should be regenerated only when necessary, to avoid any noticeable performance cost from this computation. By default, mip-maps are automatically recomputed if the texture already contains mip-maps.

The update mechanisms are currently restricted to the updating of 2D non-array textures.

Raw data update interfaces

The fastest interface to use is the one that accepts a reference to raw data. This data can either be newly created in CPU memory, or data that is extracted from an existing MTexture or MRenderTarget.

Whether newly created or not, it is important to use the appropriate row pitch, as the row pitch may not be equal to the length of a texture row. Row padding concerns mainly affect textures that are created using the DirectX API. For example, if the data is extracted from an existing texture, the row pitch information returned by the MTexture::rawData() method should be used. For new raw data, the row pitch can be computed from the bytes-per-pixel value (MTexture::bytesPerPixel()) and the width of the texture.

If the data is being extracted from a target via MRenderTarget::rawData(), the target may or may not be a texture and it is thus best to use the returned row pitch value. Note that a target (MRenderTarget) can be created with data from an on screen buffer (for example, a DirectX11 SwapChain) via the MRenderTargetManager::acquireRenderTargetFromScreen() method. A proper row pitch value should still be used in this case.
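The effect of row padding can be illustrated without any graphics API. The sketch below assumes a tightly packed source buffer and a destination whose rows are `rowPitch` bytes apart; `tightRowPitch` and `copyToPitchedRows` are hypothetical helpers introduced here for illustration only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Smallest possible row pitch: no padding at the end of a row.
std::size_t tightRowPitch(std::size_t width, std::size_t bytesPerPixel) {
    return width * bytesPerPixel;
}

// Copy tightly packed rows into a destination whose rows start rowPitch
// bytes apart. Padding bytes at the end of each destination row are left
// untouched.
void copyToPitchedRows(const std::uint8_t* src, std::uint8_t* dst,
                       std::size_t width, std::size_t height,
                       std::size_t bytesPerPixel, std::size_t rowPitch) {
    const std::size_t rowBytes = tightRowPitch(width, bytesPerPixel);
    assert(rowPitch >= rowBytes);
    for (std::size_t y = 0; y < height; ++y) {
        std::memcpy(dst + y * rowPitch, src + y * rowBytes, rowBytes);
    }
}
```

Indexing a padded buffer with `y * width * bytesPerPixel` instead of `y * rowPitch` would read or write the wrong row from the second row onward, which is why the returned row pitch should be used whenever one is available.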

The basic cost of writing new data is the upload of CPU data to GPU texture. This should not be a “blocking” operation, meaning no data is read back from the GPU. For example, for OpenGL writers, the upload can be regarded as the equivalent of calling glTexSubImage*().

Reading back from an existing texture is blocking, since the memory needs to be mapped back to CPU memory from GPU memory.

MImage update interface

Data from an existing MImage can also be used to update an existing MTexture. It is the responsibility of the caller to ensure that the format of the texture matches that of the MImage. This is currently 4-channel, 8 bits-per-channel RGBA color. The bytesPerPixel() method can be used to check for a match.

Using the MImage interface requires mapping GPU memory to the CPU, updating it and then mapping it back to the GPU.

“Backdoor” updating via GPU handle

As GPU resource handles (MTexture::resourceHandle()) are available, it is also possible to perform the update using these handles. For example, GPU to GPU resource memory transfers are not exposed in the API, but can be performed using the raw resource handles.

It is strongly recommended that operations on this handle do not change the format or the data size, as the resource is owned by the internal renderer, which has no way of knowing that any of these attributes have been modified.

Texture update examples

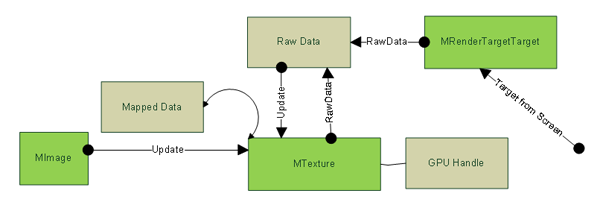

The following diagram illustrates the interaction between the various update possibilities.

Figure 29: On the left, an MImage could be used to upload new content to the MTexture. Part of this data is mapped back to the CPU and the new data copied over. The flow of data shown above the MTexture illustrates possible reading back of the raw data, and the possible updating of the texture using raw data. The top right shows that the raw data can also be read from an offscreen render target. The bottom right illustrates the ability to access the GPU handle in order to update the texture.

The following example reads back the raw pixel data from a texture, inverts the pixels, and then writes the data back to the texture. The texture here is assumed to have a format of 32-bit fixed point RGBA (8 bits per channel).

    // Get the texture description from the texture
    MHWRender::MTextureDescription desc;
    texture->textureDescription(desc);
    unsigned int bpp = texture->bytesPerPixel();

    // This code happens to only work with fixed-bit 8888 RGBA
    //
    if (bpp == 4 &&
        (desc.fFormat == MHWRender::kR8G8B8A8_UNORM ||
         desc.fFormat == MHWRender::kB8G8R8A8))
    {
        int rowPitch = 0;
        int slicePitch = 0;
        bool generateMipMaps = true;

        // Extract out the raw data from the texture. Also gets the row and slice pitch
        // The assumption is that this is 2D texture so slicePitch would be 1.
        // A GPU->CPU transfer of data will occur.
        unsigned char* pixelData = (unsigned char *)texture->rawData(rowPitch, slicePitch);
        unsigned char* val = NULL;
        if (pixelData && rowPitch > 0 && slicePitch > 0)
        {
            // Do some example operation: invert the pixel values (255-value)
            for (unsigned int i=0; i<desc.fHeight; i++)
            {
                val = pixelData + (i*rowPitch);
                for (unsigned int j=0; j<desc.fWidth*4; j++)
                {
                    *val = 255 - *val;
                    val++;
                }
            }

            // Update the texture. A CPU to GPU transfer of data will occur
            texture->update(pixelData, generateMipMaps, rowPitch);
        }
        delete [] pixelData;
    }

The sample plug-in hwApiTextureTest uses the above inversion code to update textures read from disk, blits the result to the screen, and then extracts raw data from an on screen target to transfer back to a texture. The texture is then written to disk.

The sample plug-in viewImageBlitOverride demonstrates the update of a color texture for each refresh of a render override (MRenderOverride).

Depth textures

Some interfaces exist to allow for the creation and usage of depth textures. Use of these interfaces is not mandatory, as there is nothing dictating either the format or the manner in which depth textures are used.

Maya has an internal format for storing depth values relative to a camera, either in memory or on disk. This is exposed as part of the Maya IFF file format and can be written out by a renderer and/or read from disk via API classes such as MImage.

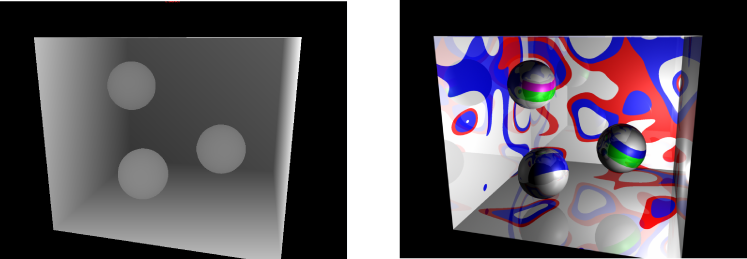

The following image shows the color and depth from a single .iff file.

As a convenience, the texture manager supports reading of depth images to create "depth textures". The internal format of such textures is a single channel 32-bit floating point stored in the red channel (R32F). The MTextureManager::acquireDepthTexture() methods can either accept reading the depth data from an MImage or from a block of raw pixels.

Also as a convenience, the data may be normalized at creation time to the [0…1] range. In order to perform the normalization, an instance of a normalization descriptor is required (MDepthNormalizationDescription). The default constructor sets the member data to the values associated with a default perspective camera. The normalization process assumes that the input data adheres to the convention used for depth buffers produced by Maya's software renderers or, equivalently, the depth format used for storage in an MImage.

As there is a cost for normalization, textures can be created unnormalized, and the appropriate shader code can be added to normalize values at render time.

If the data is being provided via an MImage, the method MImage::getDepthMapRange() can be used to extract values to use with an MDepthNormalizationDescription.
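To make the idea of normalization concrete, the sketch below converts a stored depth value to a camera distance and remaps it to the [0…1] range. It rests on two assumptions that are not confirmed by this document: that the stored value is -1/distance-to-camera (the convention mentioned in the sample code comments further down), and that the remap between the near and far clip distances is linear. The actual computation performed by the texture manager may differ. Both function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// ASSUMPTION: the stored depth value is -1 / distance-to-camera, so it is
// always negative for geometry in front of the camera.
float distanceFromStoredDepth(float stored) {
    assert(stored < 0.0f);
    return -1.0f / stored;
}

// ASSUMPTION: a plain linear remap of distance between the near and far
// clip distances, clamped to [0, 1]. Illustration only; not necessarily
// the remap that Maya applies internally.
float normalizeDistanceLinear(float distance, float nearClip, float farClip) {
    assert(farClip > nearClip);
    const float t = (distance - nearClip) / (farClip - nearClip);
    return std::min(1.0f, std::max(0.0f, t));
}
```

The same arithmetic could be moved into shader code when textures are created unnormalized, trading the creation-time cost for a per-pixel cost at render time.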

The plug-in viewImageBlitOverride shows example code for both generating raw data to fill in a normalized depth texture, as well as reading an existing depth buffer from a .iff file on disk.

From the plug-in, we extract some of the code to demonstrate example steps for creating a depth texture.

If we are only interested in loading the depth data from an MImage, a "dummy" MImage needs to be created with a desired output "target" size. The depth data is extracted from the MImage and used to acquire the depth texture from the texture manager. In this example, CPU side normalization is performed.

    // Pre-create a MImage of the output target size.
    //
    MImage image;
    image.create(targetWidth, targetHeight, 4, MImage::kByte);

    // Load depth image from disk to create the depth texture. The file on disk is assumed to
    // have depth data embedded.
    //
    MString depthImageFileName("testFile.iff");
    if (MStatus::kSuccess == image.readDepthMap( depthImageFileName ))
    {
        image.getDepthMapSize( targetWidth, targetHeight );

        // Set up normalization structure.
        // Take into the account the clipping values from the image.
        //
        MHWRender::MDepthNormalizationDescription normalizationDesc;
        float minValue, maxValue;
        image.getDepthMapRange( minValue, maxValue );
        normalizationDesc.fNearClipDistance = minValue;
        normalizationDesc.fFarClipDistance = maxValue;

        // Acquire a texture using the depth pixels and normalization structure
        mDepthTexture.texture = textureManager->acquireDepthTexture("",
            image, false, &normalizationDesc );
    }

For loading from raw data, we simply allocate a block of floating point data and fill it with suitable values. In this case, we create a checker pattern where each tile has a different camera depth.

The code shows the option of whether or not to create normalized values (via the useCameraDistanceValues variable). The code also shows the option of taking that raw data and repackaging it into an MImage in order to use that interface (using the createDepthWithMImage variable).

    // Load depth using programmatically created data
    //
    float *textureData = new float[targetWidth*targetHeight];
    if (textureData)
    {
        // Use 'createDepthWithMImage' to switch between using the MImage
        // and raw data interfaces.
        //
        // Use 'useCameraDistanceValues' to switch between using -1/distance-to-camera
        // values versus using normalized depth coordinates [0...1].
        // The flag is set to create normalized values by default in order to
        // match the requirements of the shader used to render the texture.
        //
        bool createDepthWithMImage = false;
        bool useCameraDistanceValues = false;

        // Create some dummy 'checkered' depth data.
        //
        float depthValue = useCameraDistanceValues ? -1.0f / 100.0f : 1.0f;
        float depthValue2 = useCameraDistanceValues ? -1.0f / 500.0f : 0.98f;
        for (unsigned int y = 0; y < targetHeight; y++)
        {
            float* pPixel = textureData + (y * targetWidth);
            for (unsigned int x = 0; x < targetWidth; x++)
            {
                bool checker = (((x >> 5) & 1) ^ ((y >> 5) & 1)) != 0;
                *pPixel++ = checker ? depthValue : depthValue2;
            }
        }

        // Create a default normalization structure
        MHWRender::MDepthNormalizationDescription normalizationDesc;

        // Take the raw data and repackage it into an MImage and use that interface
        // This is presented as an example only and is not necessary since the
        // raw data interface is available.
        if (createDepthWithMImage)
        {
            image.setDepthMap( textureData, targetWidth, targetHeight );
            mDepthTexture.texture = textureManager->acquireDepthTexture("",
                image, false, useCameraDistanceValues ? &normalizationDesc : NULL );
        }
        // Otherwise, pass the raw data directly to the raw data interface
        //
        else
        {
            mDepthTexture.texture = textureManager->acquireDepthTexture("",
                textureData, targetWidth, targetHeight, false,
                useCameraDistanceValues ? &normalizationDesc : NULL );
        }

        // Data not required anymore so can delete it.
        delete [] textureData;
    }

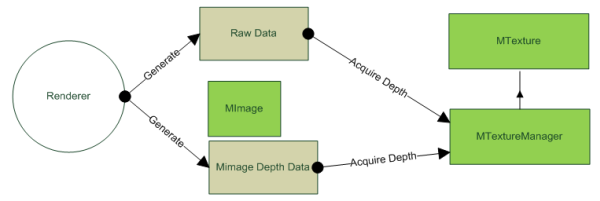

Figure 31: The possible data paths for creating depth textures are shown. The data can be created by a given renderer or by hand. The interfaces to MTextureManager allow for input via raw data or via an MImage argument.

Blitting depth textures

The simplest way to transfer the contents of a depth texture to an output target is to use a shader (MShaderInstance).

A sample shader called mayaBlitColorDepth has been provided in effects file format. The shader can be acquired via the shader manager. The shader currently shows the transfer from an R32F input texture containing normalized values to depth output.

As with color textures, the target coordinate system runs from top to bottom. The sample shader performs the vertical flip in V as appropriate. Plug-in writers can create their textures with whatever convention they choose and perform the V flip as needed to be consistent.

This shader is used in the viewImageBlitOverride plug-in example.
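If the flip is not done in the shader (where it amounts to sampling at 1 - v), it can be applied to the CPU data before the texture is created. The following is a minimal sketch that assumes a tightly packed, single-channel float image; `flipRowsInPlace` is a hypothetical helper, not part of the API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: reverse the row order of a tightly packed,
// single-channel float image in place (a vertical flip).
void flipRowsInPlace(std::vector<float>& pixels,
                     std::size_t width, std::size_t height) {
    assert(pixels.size() == width * height);
    for (std::size_t y = 0; y < height / 2; ++y) {
        float* upper = pixels.data() + y * width;
        float* lower = pixels.data() + (height - 1 - y) * width;
        std::swap_ranges(upper, upper + width, lower);
    }
}
```

Flipping on the CPU costs one extra pass over the data at creation time, whereas flipping in the shader costs nothing extra per texture but requires every shader that samples the texture to agree on the convention.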