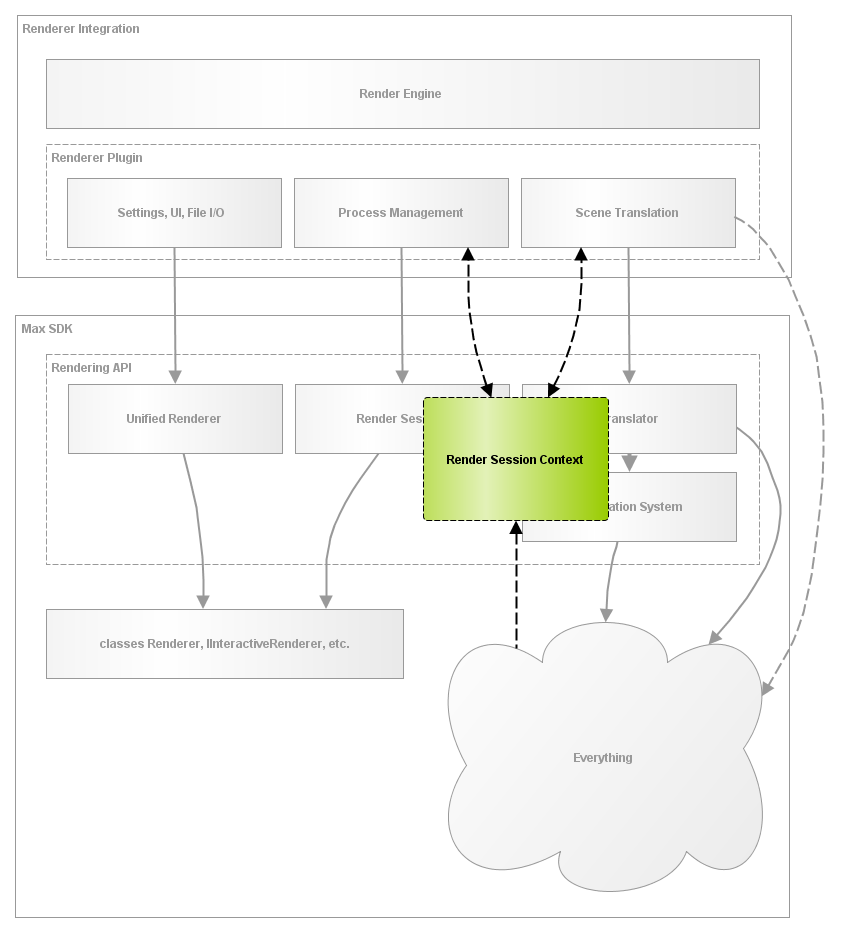

The Render Session Context

The Render Session Context is the interface through which the Unified Renderer and the Render Session communicate. It is the glue that binds the various components of the Rendering API.

The Render Session Context is created by the Unified Renderer. It is a two-way communication channel between the Rendering API core (the system) and the render session (the plugin). It encapsulates the following:

- The scene definition, abstracted into a common, simplified representation - regardless of the render target type (offline or interactive).

- Logging, progress reporting, and process control.

- Frame buffer management.

- Instancing management.

- Notification management.

- Etc.

Scene Definition

The Render Session Context exposes a set of methods for accessing the scene to be rendered:

virtual const ISceneContainer& GetScene() const = 0;

virtual const ICameraContainer& GetCamera() const = 0;

virtual IEnvironmentContainer* GetEnvironment() const = 0;

virtual const IRenderSettingsContainer& GetRenderSettings() const = 0;

virtual std::vector<IRenderElement*> GetRenderElements() const = 0;

virtual std::vector<Atmospheric*> GetAtmospherics() const = 0;

virtual std::vector<Effect*> GetEffects() const = 0;

The first four methods return interfaces that are defined by the Rendering API. These further abstract / encapsulate specific aspects of the scene definition.

The Scene Container

Class ISceneContainer exposes the exact set of objects to be rendered:

virtual std::vector<INode*> GetGeometricNodes(const TimeValue t, Interval& validity) const = 0;

virtual std::vector<INode*> GetLightNodes(const TimeValue t, Interval& validity) const = 0;

virtual std::vector<INode*> GetHelperNodes(const TimeValue t, Interval& validity) const = 0;

Internally, this interface handles the scene traversal and all object flags or rendering options that may include or exclude specific objects from the render. For example, it handles the fact that hidden geometric objects should be excluded (unless rendering of hidden objects is enabled), while hidden light objects should not.

The root node is also available, should the renderer need to do its own traversal:

virtual INode* GetRootNode() const = 0;
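As an illustration of how a renderer might consume these methods, the following minimal sketch gathers the geometric and light nodes for one frame while narrowing a single validity interval. It does not use the 3ds Max SDK: `TimeValue`, `Interval`, `INode`, and `ISceneContainer` are reduced stand-ins declared locally so the snippet compiles on its own, and `FixedScene`, `FrameNodes`, and `CollectRenderableNodes` are hypothetical names. It also assumes that each getter intersects the passed-in validity with its own, which the text above does not state explicitly.

```cpp
#include <algorithm>
#include <limits>
#include <vector>

namespace sketch {

// Stand-ins for SDK types (assumptions, not the real definitions).
using TimeValue = int;

struct Interval {
    TimeValue start = std::numeric_limits<TimeValue>::min();
    TimeValue end   = std::numeric_limits<TimeValue>::max();

    // Narrow this interval to its overlap with [s, e].
    void Intersect(TimeValue s, TimeValue e) {
        start = std::max(start, s);
        end   = std::min(end, e);
    }
};

struct INode { int id; };

// Reduced version of the interface shown above.
class ISceneContainer {
public:
    virtual ~ISceneContainer() = default;
    virtual std::vector<INode*> GetGeometricNodes(const TimeValue t, Interval& validity) const = 0;
    virtual std::vector<INode*> GetLightNodes(const TimeValue t, Interval& validity) const = 0;
};

// Hypothetical mock: returns fixed node lists with fixed validity ranges.
class FixedScene : public ISceneContainer {
public:
    std::vector<INode*> geometry;
    std::vector<INode*> lights;

    std::vector<INode*> GetGeometricNodes(const TimeValue, Interval& validity) const override {
        validity.Intersect(0, 100);   // pretend the geometry set is stable over [0, 100]
        return geometry;
    }
    std::vector<INode*> GetLightNodes(const TimeValue, Interval& validity) const override {
        validity.Intersect(50, 200);  // pretend the light set is stable over [50, 200]
        return lights;
    }
};

struct FrameNodes {
    std::vector<INode*> geometry;
    std::vector<INode*> lights;
    Interval validity;  // time range over which both lists stay unchanged
};

// Query both node lists, letting each call narrow the same validity interval.
FrameNodes CollectRenderableNodes(const ISceneContainer& scene, TimeValue t) {
    FrameNodes out;
    out.geometry = scene.GetGeometricNodes(t, out.validity);
    out.lights   = scene.GetLightNodes(t, out.validity);
    return out;
}

}  // namespace sketch
```

Sharing one interval across the calls means the result describes how long the whole collected set can be reused before the renderer needs to query the container again.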

The Camera Container

Class ICameraContainer exposes the properties of the camera in a way that abstracts the type of camera being rendered, whether it is a GenCamera object, ViewParams, ViewExp, IPhysicalCamera, or something else. The logic for interpreting these different types of cameras and views has always been a source of confusion for plugin developers.

//! \name Camera Projection

virtual MotionTransforms EvaluateCameraTransform(

const TimeValue t,

Interval& validity,

const MotionBlurSettings* camera_motion_blur_settings = nullptr) const = 0;

virtual ProjectionType GetProjectionType(const TimeValue t, Interval& validity) const = 0;

virtual float GetPerspectiveFOVRadians(const TimeValue t, Interval& validity) const = 0;

virtual float GetOrthographicApertureWidth(const TimeValue t, Interval& validity) const = 0;

virtual float GetOrthographicApertureHeight(const TimeValue t, Interval& validity) const = 0;

//! \name Focus and Depth-of-Field

virtual bool GetDOFEnabled(const TimeValue t, Interval& validity) const = 0;

virtual float GetLensFocalLength(const TimeValue t, Interval& validity) const = 0;

virtual float GetFocusPlaneDistance(const TimeValue t, Interval& validity) const = 0;

virtual float GetLensApertureRadius(const TimeValue t, Interval& validity) const = 0;

//! \name Clipping Planes

virtual bool GetClipEnabled(const TimeValue t, Interval& validity) const = 0;

virtual float GetClipNear(const TimeValue t, Interval& validity) const = 0;

virtual float GetClipFar(const TimeValue t, Interval& validity) const = 0;

//! \name Resolution, Region, and Bitmap

virtual IPoint2 GetResolution() const = 0;

virtual float GetPixelAspectRatio() const = 0;

virtual Bitmap* GetBitmap() const = 0;

virtual Box2 GetRegion() const = 0;

virtual Point2 GetImagePlaneOffset(const TimeValue t, Interval& validity) const = 0;

The camera can also be accessed directly if needed:

virtual INode* GetCameraNode() const = 0;

virtual IPhysicalCamera* GetPhysicalCamera(const TimeValue t) const = 0;

virtual const View& GetView(const TimeValue t, Interval& validity) const = 0;

virtual ViewParams GetViewParams(const TimeValue t, Interval& validity) const = 0;

The camera container also exposes the global motion blur settings:

virtual MotionBlurSettings GetGlobalMotionBlurSettings(const TimeValue, Interval&) const = 0;

The Environment Container

The Rendering API introduces a new type of plugin to 3ds Max: the Environment. This expands the traditional environment, represented by a single texture map, through the introduction of separate environment and background textures and colors. Class IEnvironmentContainer exposes these methods:

virtual BackgroundMode GetBackgroundMode() const = 0;

virtual Texmap* GetBackgroundTexture() const = 0;

virtual AColor GetBackgroundColor(const TimeValue t, Interval& validity) const = 0;

Class ITexturedEnvironment expands IEnvironment with these methods:

virtual EnvironmentMode GetEnvironmentMode() const = 0;

virtual Texmap* GetEnvironmentTexture() const = 0;

virtual AColor GetEnvironmentColor(const TimeValue t, Interval& validity) const = 0;

The Render Settings Container

Class IRenderSettingsContainer exposes various parameters that control the rendering process:

virtual bool GetRenderOnlySelected() const = 0;

virtual TimeValue GetFrameDuration() const = 0;

bool GetIsMEditRender() const;

virtual RenderTargetType GetRenderTargetType() const = 0;

virtual Renderer& GetRenderer() const = 0;

virtual ToneOperator* GetToneOperator() const = 0;

virtual float GetPhysicalScale(const TimeValue t, Interval& validity) const = 0;

Logging & Progress Reporting

The Render Session Context provides access to interfaces that specifically handle logging, progress reporting, and more:

virtual IRenderingProcess& GetRenderingProcess() const = 0;

virtual IRenderingLogger& GetLogger() const = 0;

Logging

Class IRenderingLogger provides simple message logging functionality. The messages are internally forwarded to the proper systems - for example, to the Production or ActiveShade message windows, as appropriate.

virtual void LogMessage(const MessageType type, const MCHAR* message) = 0;

virtual void LogMessageOnce(const MessageType type, const MCHAR* message) = 0;

Progress Reporting & Process Control

Class IRenderingProcess handles progress reporting:

virtual void SetRenderingProgressTitle(const MCHAR* title) = 0;

virtual void SetRenderingProgress(const size_t done, const size_t total, const ProgressType progressType) = 0;

virtual void SetInfiniteProgress(const size_t done, const ProgressType progressType) = 0;

It also handles timing and reporting of milestones:

virtual void TranslationStarted() = 0;

virtual void TranslationFinished() = 0;

virtual void RenderingStarted() = 0;

virtual void RenderingPaused() = 0;

virtual void RenderingResumed() = 0;

virtual void RenderingFinished() = 0;

virtual float GetTranslationTimeInSeconds() const = 0;

virtual float GetRenderingTimeInSeconds() const = 0;

It is responsible for reporting user aborts:

virtual bool HasAbortBeenRequested() = 0;

Finally, this class implements a system for running tasks from the main thread. This is to be used when, for example, the renderer needs to access the 3ds Max scene, which is only safe when done from the main thread.
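The main-thread job mechanism could be used roughly as sketched here: scene access is wrapped in a job object, the job is handed to the rendering process, and the results are read back afterwards. This is a hedged illustration rather than SDK code. `IMainThreadJob` is given an assumed single method `ExecuteMainThreadJob()`, the `bool` return value of `RunJobFromMainThread` is assumed to mean "the job was executed", and `InlineProcess`, `CollectNamesJob`, and `FetchNodeNames` are hypothetical names. The mock runs the job immediately on the calling thread, whereas a real implementation would marshal it to the main thread.

```cpp
#include <string>
#include <utility>
#include <vector>

namespace sketch {

// Assumed shape of the job interface; only its name appears in this document.
class IMainThreadJob {
public:
    virtual ~IMainThreadJob() = default;
    virtual void ExecuteMainThreadJob() = 0;
};

// Reduced version of IRenderingProcess with only the methods used here.
class IRenderingProcess {
public:
    virtual ~IRenderingProcess() = default;
    virtual bool HasAbortBeenRequested() = 0;
    virtual bool RunJobFromMainThread(IMainThreadJob& job) = 0;
};

// Hypothetical mock: executes the job inline instead of on a real main thread.
class InlineProcess : public IRenderingProcess {
public:
    bool abort_requested = false;

    bool HasAbortBeenRequested() override { return abort_requested; }

    bool RunJobFromMainThread(IMainThreadJob& job) override {
        if (abort_requested) {
            return false;  // assumption: the job is skipped once an abort is pending
        }
        job.ExecuteMainThreadJob();
        return true;
    }
};

// Job that copies data out of the (stand-in) scene while on the main thread.
class CollectNamesJob : public IMainThreadJob {
public:
    explicit CollectNamesJob(const std::vector<std::string>& scene_names)
        : scene_names_(scene_names) {}

    void ExecuteMainThreadJob() override { names = scene_names_; }

    std::vector<std::string> names;  // result, read by the render thread afterwards

private:
    const std::vector<std::string>& scene_names_;
};

// Called from the render thread: returns false if the job did not run.
bool FetchNodeNames(IRenderingProcess& process,
                    const std::vector<std::string>& scene_names,
                    std::vector<std::string>& out) {
    CollectNamesJob job(scene_names);
    if (!process.RunJobFromMainThread(job)) {
        return false;
    }
    out = std::move(job.names);
    return true;
}

}  // namespace sketch
```

Keeping the result inside the job object means the render thread touches only its own copy of the data and never the scene itself.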

virtual bool RunJobFromMainThread(IMainThreadJob& job) = 0;

Scene Evaluation

The Render Session Context provides methods for evaluating scene nodes while taking motion blur settings into account:

virtual MotionTransforms EvaluateMotionTransforms(

INode& node,

const TimeValue t,

Interval& validity,

const MotionBlurSettings* node_motion_blur_settings = nullptr) const = 0;

virtual MotionBlurSettings GetMotionBlurSettingsForNode(

INode& node,

const TimeValue t,

Interval& validity,

const MotionBlurSettings* global_motion_blur_settings = nullptr) const = 0;

These methods internally fetch all the motion blur properties from the node and the camera, but still allow the renderer to provide its own.

The Context also provides methods for handling of RenderBegin() and RenderEnd(). These methods are designed to function correctly with both offline and interactive rendering:

virtual void CallRenderBegin(ReferenceMaker& refMaker, const TimeValue t) = 0;

virtual void CallRenderEnd(const TimeValue t) = 0;

Processing of Frame Buffer

The Render Session may store its rendered frame buffer directly, or it may rely on the built-in mechanism through these methods:

virtual IFrameBufferProcessor& GetMainFrameBufferProcessor() = 0;

virtual void UpdateBitmapDisplay() = 0;

Class IFrameBufferProcessor is optimized to make use of threads and can handle processing of the ToneOperator.

Interactive Change Notifications

Classes IRenderSessionContext, ISceneContainer, ICameraContainer, and IRenderSettingsContainer all expose methods for registering notification callbacks, which are called whenever the contents of the container change. For example, if render selected is being used and the selection set changes, then ISceneContainer notifies the renderer that the contents of the scene have changed.

virtual void RegisterChangeNotifier(IChangeNotifier& notifier) const = 0;

virtual void UnregisterChangeNotifier(IChangeNotifier& notifier) const = 0;
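Because registration and unregistration must be paired, one way to use these two methods is through a small scope guard, sketched here. The sketch is self-contained and does not use the SDK. The callback method `NotifyChanged()` is an assumption (the actual `IChangeNotifier` methods are not listed in this document), and `INotifyingContainer`, `MockContainer`, `ScopedChangeRegistration`, and `DirtyFlagNotifier` are hypothetical names introduced for illustration.

```cpp
#include <algorithm>
#include <vector>

namespace sketch {

// Assumed callback shape; the real IChangeNotifier methods are not shown above.
class IChangeNotifier {
public:
    virtual ~IChangeNotifier() = default;
    virtual void NotifyChanged() = 0;
};

// Reduced container exposing only the two registration methods shown above.
class INotifyingContainer {
public:
    virtual ~INotifyingContainer() = default;
    virtual void RegisterChangeNotifier(IChangeNotifier& notifier) const = 0;
    virtual void UnregisterChangeNotifier(IChangeNotifier& notifier) const = 0;
};

// Hypothetical mock container that can simulate a content change.
class MockContainer : public INotifyingContainer {
public:
    void RegisterChangeNotifier(IChangeNotifier& notifier) const override {
        notifiers_.push_back(&notifier);
    }
    void UnregisterChangeNotifier(IChangeNotifier& notifier) const override {
        notifiers_.erase(std::remove(notifiers_.begin(), notifiers_.end(), &notifier),
                         notifiers_.end());
    }
    void SimulateChange() const {
        for (IChangeNotifier* n : notifiers_) n->NotifyChanged();
    }

private:
    // mutable because the registration methods are declared const
    mutable std::vector<IChangeNotifier*> notifiers_;
};

// Scope guard: registers on construction, unregisters on destruction.
class ScopedChangeRegistration {
public:
    ScopedChangeRegistration(const INotifyingContainer& container, IChangeNotifier& notifier)
        : container_(container), notifier_(notifier) {
        container_.RegisterChangeNotifier(notifier_);
    }
    ~ScopedChangeRegistration() { container_.UnregisterChangeNotifier(notifier_); }

    ScopedChangeRegistration(const ScopedChangeRegistration&) = delete;
    ScopedChangeRegistration& operator=(const ScopedChangeRegistration&) = delete;

private:
    const INotifyingContainer& container_;
    IChangeNotifier& notifier_;
};

// Notifier that only counts changes; a renderer would schedule a re-translation instead.
class DirtyFlagNotifier : public IChangeNotifier {
public:
    int change_count = 0;
    void NotifyChanged() override { ++change_count; }
};

}  // namespace sketch
```

With this pattern, a render session can hold one guard per container for its lifetime, so that no callback outlives the object it points to.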