XR Enhancements and Improvements

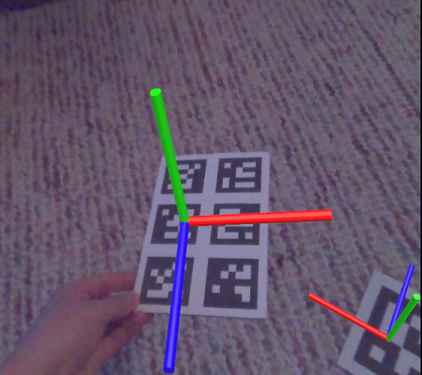

VRED 2022 added Varjo MR marker placement and preferences, hand gestures, and hand depth estimation, along with out-of-the-box object placement in XR and VR through the VR Menu. We also added Python functions for implementing a custom version of marker tracking. They can be found in the vrImmersiveInteractionService documentation.

Varjo Markers

Only available for Varjo XR HMD users.

Use a visible marker, known as the origin marker, for easy placement of objects in a mixed reality scene. The coordinate system is automatically synced; however, your nodes need to be prepared before entering MR.


Use Enable Markers from the VR Menu to let supported HMDs detect markers. Once the HMD recognizes a marker, the content of any node tagged with VarjoMarker is moved to the marker's location. The marker's ID number must be set as a separate tag.

Tag syntax is case-sensitive.

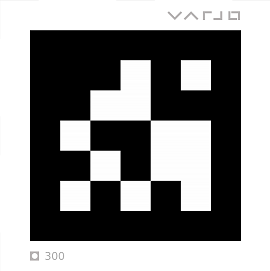

Multiple markers can be combined into a single marker. This improves tracking stability.

For example, multiple nodes are tagged with VarjoMarker, but each has a different number tag. The marker tracking system detects the markers and assigns each a confidence value ranging from 0.0 to 1.0. If a marker is deemed correct, the node content is moved to that marker's location. For more about confidence values, see the Min Marker Confidence Virtual Reality preference.

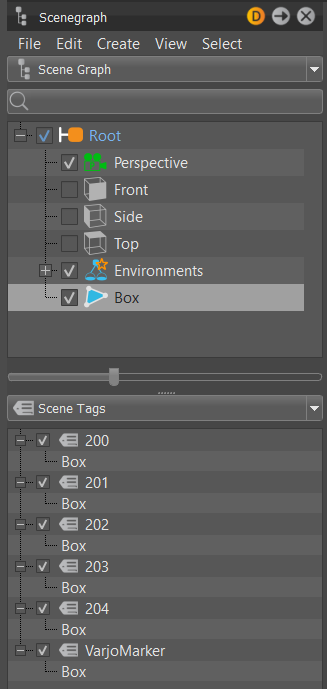

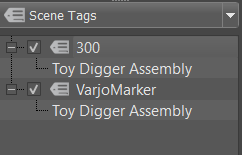

Preparing Nodes for Markers

Only available for Varjo XR HMD users.

Follow these steps to prepare your nodes before entering MR. Note that objects that won't fit in your room will need to be scaled down before entering MR.

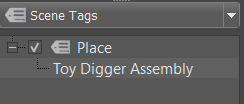

- Add a tag and rename it VarjoMarker.

- Drag and drop an object node onto this tag to assign it.

- Create another tag and rename it to the number printed on the Varjo marker.

- Drag and drop the same object node onto this tag.
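To make the tagging rule concrete, here is a minimal pure-Python model (not VRED code) that treats a node's tags as a list of strings and reports which marker ID the node would follow. The function name `marker_id_for_tags` is hypothetical, and treating the ID tag as the single all-digit tag is an assumption based on the steps above.

```python
from typing import Iterable, Optional

MARKER_TAG = "VarjoMarker"  # tag syntax is case-sensitive


def marker_id_for_tags(tags: Iterable[str]) -> Optional[int]:
    """Return the marker ID a node is bound to, or None if it is not set up.

    Hypothetical model: a node qualifies only if it carries the exact tag
    "VarjoMarker" plus one separate tag holding the printed marker number.
    """
    tag_list = list(tags)
    if MARKER_TAG not in tag_list:  # exact, case-sensitive comparison
        return None
    ids = [int(t) for t in tag_list if t.isdigit()]
    # Assumption: exactly one numeric tag identifies the marker.
    return ids[0] if len(ids) == 1 else None


print(marker_id_for_tags(["VarjoMarker", "100"]))  # 100
print(marker_id_for_tags(["varjomarker", "100"]))  # None (wrong case)
print(marker_id_for_tags(["VarjoMarker"]))         # None (no ID tag)
```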

Using Markers

Only available for Varjo XR HMD users.

Nodes in your scene must be tagged before you continue. If this has not been done, see Preparing Nodes for Markers.

- While wearing a connected Varjo HMD that supports MR, select View > Display > Varjo HMD in VRED. The objects tagged with the VarjoMarker and number tags appear in MR.

- Open the VR Menu with your controller and select Enable Markers.

The geometry jumps directly onto the marker.

Hands in MR

Currently, only Varjo XR HMDs are supported for MR.

In XR, VRED replaces the VR X-Ray material hands with your own.


To enter MR, select the MR feature in the VR Menu.

Be aware that the XR-1 and XR-3 support different functionality in MR.

- The XR-1 supports hand depth estimation and marker tracking.

- The XR-3 supports hand depth estimation, marker tracking, and hand tracking (gestures), so hand gestures can be used for scene interaction in place of controllers. Because VRED replaces the standard hands, which support joints/bones, with your real-world hands, you can use Python to connect hand trackers for scene interaction.

Connecting Hand Trackers with Python

To see how to connect hand tracking using Python, select File > Open Examples and navigate to the vr folder, where you'll find externalHandTracking.py. This file provides an example of how to set up hand tracking.

- For the left hand, use vrDeviceService.getLeftTrackedHand().

- For the right hand, use vrDeviceService.getRightTrackedHand().

The returned object is of type vrdTrackedHand.
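As a minimal sketch, assuming the script runs inside VRED where vrDeviceService is available, the two documented getters can be wrapped as shown below. The wrapper name `get_tracked_hands` is hypothetical; the service is passed in as a parameter so the function can also be exercised with a stand-in object. What to set on each vrdTrackedHand is shown in externalHandTracking.py.

```python
def get_tracked_hands(device_service):
    """Return the (left, right) vrdTrackedHand objects.

    device_service is expected to be VRED's vrDeviceService; only the two
    documented getters are called.
    """
    left = device_service.getLeftTrackedHand()
    right = device_service.getRightTrackedHand()
    return left, right


# Inside VRED's script editor:
# left_hand, right_hand = get_tracked_hands(vrDeviceService)
```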

Hand Depth Estimation

Currently, only Varjo XR HMDs are supported for MR.

Detects your real-world hands in the mixed reality video and shows them in front of a virtual object, if they are closer than the object. When disabled, the rendering from VRED will always occlude the real hands, even if the hands are closer than the rendered object.


Find this option in the VR Menu. To set a default behavior for this option, visit the Virtual Reality preferences > HMD tab > Varjo section.

| Hand Depth Estimation OFF | Hand Depth Estimation ON |
|---|---|
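The Hand Depth Estimation behavior can be read as a per-pixel depth test. The pure-Python sketch below models that rule for a single pixel; it is a conceptual illustration, not VRED or Varjo code, and the function name, the "real"/"virtual" labels, and the convention that depths are distances from the viewer (smaller is closer) are assumptions.

```python
from typing import Optional


def visible_layer(hand_depth: Optional[float],
                  virtual_depth: Optional[float],
                  depth_estimation_enabled: bool) -> str:
    """Return "real" (MR video) or "virtual" (VRED rendering) for one pixel.

    Depths are distances from the viewer; None means no hand or no
    rendered object was found at this pixel.
    """
    if virtual_depth is None:
        return "real"     # nothing rendered here, so the video shows through
    if not depth_estimation_enabled or hand_depth is None:
        return "virtual"  # the rendering always occludes the real hands
    # Show the real hand only where it is closer than the virtual object.
    return "real" if hand_depth < virtual_depth else "virtual"
```

With the option enabled, a hand 0.4 m away in front of an object 0.6 m away is shown; with it disabled, the object covers the hand.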

Displaying Your Hands in Mixed Reality

Currently, only Varjo XR HMDs are supported for MR.

Use the Hand Depth Estimation tool to display your hands while in mixed reality.

- While wearing a connected Varjo HMD that supports MR, select View > Display > Varjo HMD in VRED.

- Press the Menu button on your controller to open the VR Menu.

- Press Hand Depth Estimation to see your real-world hands in MR.

Hand Gestures

This section describes the hand gestures VRED supports for interacting with your digital designs. Your hand movement is tracked as you tap parts of a model with your index finger to initiate touch events, select tools from the VR Menu, or interact with HTML 5 HMI screens. Use these gestures to navigate scenes without the need for controllers.

The virtual hands will automatically adjust to match your real-world ones.

Touch Interactions

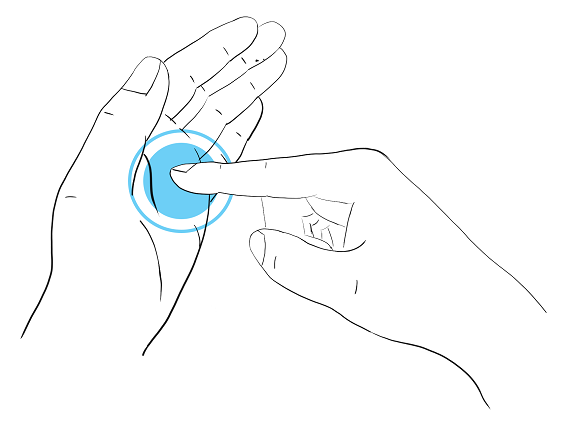

Use your index finger’s tip (on either hand) to touch and interact with scene content, such as the xR Home Menu, objects with touch sensors, or HTML 5 HMI screens.

xR VR Menu

To open and close the xR Home Menu, use the same gestures.

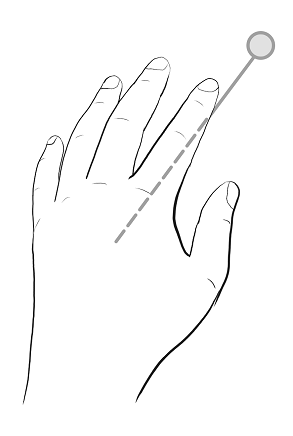

Move your index finger to the palm of your other hand. This opens the menu. Now you can use your index finger to select an option from the menu. Repeat the initial gesture to close the VR Menu.

For information on the VR Menu and its tools, see VR Menu.

If your hands are in front of a virtual object, but are occluded by it, enable Hand Depth Estimation.

Teleport

To teleport, you will need to initiate the teleporter, orient the arc and arrow, then execute a jump to the new location. When finished, terminate the teleporter.

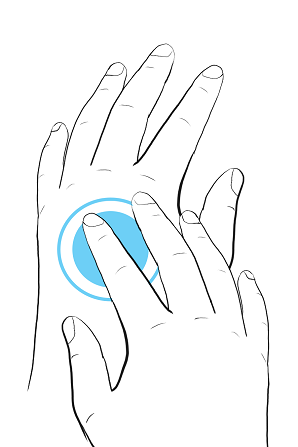

Initiating and Terminating Teleport

To initiate and terminate teleporting, use the same gestures.

Using one hand, tap the back of the other hand. This initiates the teleport from the tapped hand. Now orient the arc and teleport. Repeat this gesture to terminate the teleporter.

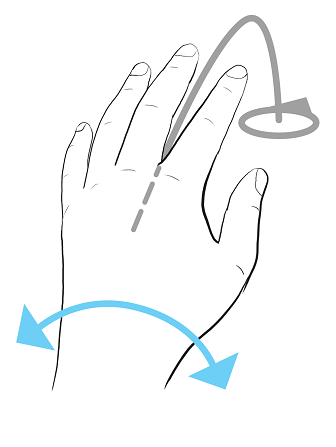

Teleport Orientation

Rotate your wrist, while the teleport arc is displayed, to orient the teleporter. Now teleport.

Teleporting

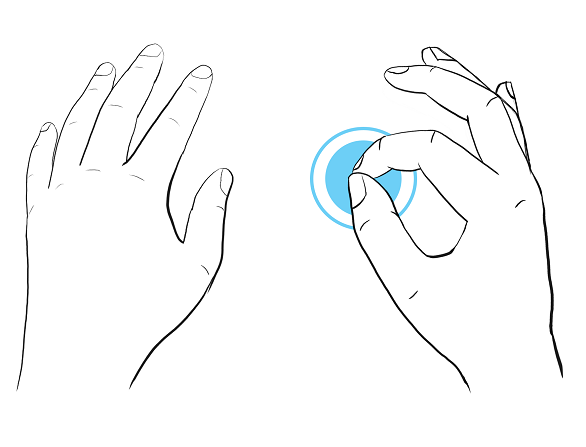

With the hand not currently displaying the teleport arc, pinch your index finger and thumb together. This accepts the location and orientation of the teleport arc and executes the teleport. When finished, terminate the teleporter to exit the tool.

For information on teleporting, see Teleporting.

Laser Pointer

To use the Laser Pointer, you will need to initiate, execute, then terminate it.

Initiating and Terminating the Laser Pointer

To initiate and terminate the Laser Pointer, use the same gestures.

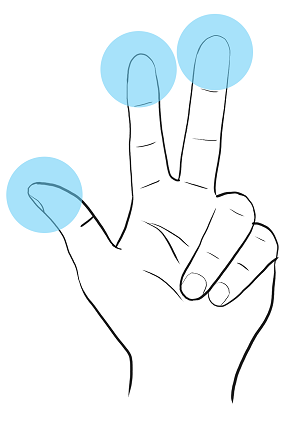

Point your thumb, index, and middle fingers out with your palm facing the camera. You can now use the laser pointer to point at things or trigger interactions with scene content. Repeat this gesture to terminate the Laser Pointer.

Using the Laser Pointer

Use your index finger to point at scene content.

Pinch your index finger and thumb together to trigger interaction with scene content, such as selecting tools from the VR Menu, activating a touch sensor, or interacting with HTML 5 HMI screens. When finished, terminate the Laser Pointer to exit the tool.

Place Tool

Only available in MR mode.

In a collaborative XR session, object positions are synchronized across users.

Use the Place tool to place objects on the ground of the scene and move them along it using the laser pointer. These objects must be assigned a scene tag called Place.

Like Teleport, the Place tool rotates objects when you rotate your wrist. Press the controller's Grip button to snap the rotation to 10° increments; use this when aligning one object with another. Objects can also be placed on top of one another.

Preparing Nodes for Placement

Only available in MR mode.

To pick and place objects in MR, you must first prepare the nodes. If objects are too large or small for your room, they will need to be scaled to an appropriate size before entering MR.

- Create a tag and rename it Place.

- In the Scenegraph, drag and drop an object node onto this tag.

Using Place

Only available in MR mode.

Nodes from your scene must be tagged before continuing. If this has not been done, follow the instructions in Preparing Nodes for Placement.

- While wearing a connected Varjo HMD that supports MR, select View > Display > Varjo HMD in VRED. The objects tagged with Place appear in MR.

- Open the VR Menu by pressing the Menu button on your controller, then select Place.

When highlighted orange, the tool is enabled. When gray, the tool is disabled. An indicator appears above your controller stating what is active and picked.

Point at an object and keep the trigger squeezed to pick it up and move it along the virtual ground.

Rotate your wrist to rotate the object around the up axis. This action is similar to that of the Teleport tool.

Tip: Squeeze the Grip button to snap the rotation to 10° increments. Use this to help align your object with another.
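The 10° snap amounts to rounding the wrist-driven rotation about the up axis to the nearest multiple of 10. This plain-Python sketch shows only that arithmetic; the function name and the choices to round halfway values up and wrap the result into [0, 360) are assumptions, not VRED's internal behavior.

```python
import math


def snap_yaw(angle_deg: float, step_deg: float = 10.0) -> float:
    """Snap a rotation about the up axis to the nearest step_deg multiple.

    Halfway values round up, and the result is wrapped into [0, 360).
    """
    snapped = math.floor(angle_deg / step_deg + 0.5) * step_deg
    return snapped % 360.0


print(snap_yaw(23.0))   # 20.0
print(snap_yaw(358.0))  # 0.0 (wraps around)
```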

Hand Tracking Preferences

Only available for Varjo XR-3 users.

We added interaction preferences to the Virtual Reality preferences for enabling hand tracking and setting translational and rotational hand offsets for tracked hands.

Enabling hand tracking in the preferences automatically activates it for VR or MR.

Use Tracked Hands in VR

Only available for Varjo XR-3 users.

Sets the default to always track hands in VR when enabled. If Tracker is set to Varjo Integrated Ultraleap, hand tracking is also enabled in XR for supported HMDs.

Tracker

Only available for Varjo XR-3 users.

Sets the default system used for hand tracking.

- Varjo users need to enable Varjo Integrated Ultraleap.

- To use other hand tracking devices, select Custom.

Setting up Hand Tracking for Other Devices

For the Custom Tracker option, you must provide all the tracking data to VRED's Python interface. How to do this varies from device to device; however, if you can access the tracking data via a Python script, the data needs to be set on the vrdTrackedHand objects returned by vrDeviceService.getLeftTrackedHand() and vrDeviceService.getRightTrackedHand(). This requires the transformation data of the tracked hand and/or the individual finger joints (see the externalHandTracking.py example file for how this works).

For testing, set the corresponding preferences, load the script, and enter VR. You may have to modify the script by changing the hand and/or joint transform values to understand how everything works.

Translation Offset

Set the default translational tracking offset from the hands. Use this to adjust any offset between the tracked hands and the hands rendered in VR.

Rotational Offset

Set the default rotational tracking offset from the hands. Use this to adjust any offset between the tracked hands and the hands rendered in VR.
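How VRED combines these offsets with the tracked pose is not stated here. As an illustration only, this pure-Python sketch applies a translational and a rotational offset in the hand's local frame using unit quaternions; the function names, the (w, x, y, z) convention, and the local-frame assumption are all hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b (rotation b is applied first, then a)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q, computed as q * v * conj(q)."""
    w, x, y, z = q
    conj = (w, -x, -y, -z)
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, *v)), conj)
    return (rx, ry, rz)


def apply_hand_offset(pos: Vec3, rot: Quat,
                      t_offset: Vec3, r_offset: Quat) -> Tuple[Vec3, Quat]:
    """Return the rendered hand pose for a tracked pose plus offsets.

    Assumption: both offsets are expressed in the hand's local frame.
    """
    dx, dy, dz = rotate(rot, t_offset)  # move along the hand's own axes
    new_pos = (pos[0] + dx, pos[1] + dy, pos[2] + dz)
    new_rot = quat_mul(rot, r_offset)   # extra rotation in the local frame
    return new_pos, new_rot
```

Expressing the offsets in the local frame means the correction follows the hand as it turns, which is usually what a fixed mounting offset between tracker and hand requires.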

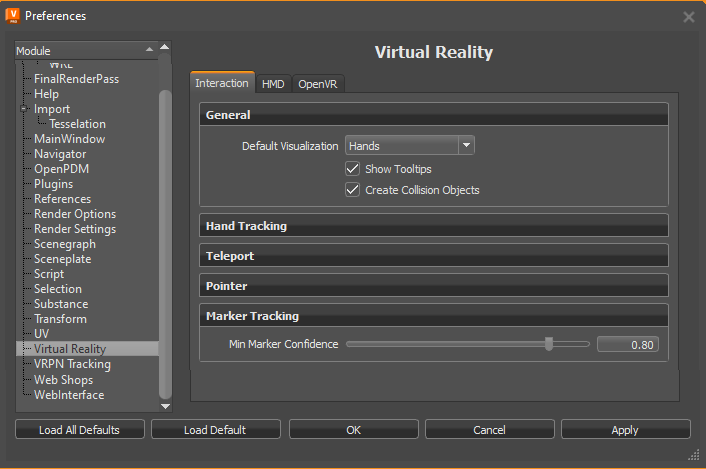

Marker Tracking

The marker tracking system detects each marker and assigns it a confidence value ranging from 0.0 to 1.0.

0.0 means the system deems the detected result (marker ID and position) 100% incorrect.

1.0 means the system deems the detected result (marker ID and position) 100% correct.

For example, a value of 0.9 means the system is 90% confident that the marker's position and ID are correct.

A marker with a low confidence value may appear to jump around. Setting the minimum accepted confidence to a higher value makes the system ignore unreliable results.

If a marker was first detected with a high confidence value but is later detected with a confidence value below the minimum, the new result is ignored and the marker's position is not updated.
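The acceptance rules above can be modeled with a small pure-Python class. This is a sketch of the described behavior, not VRED's implementation; the class name, the per-ID position store, and treating a confidence equal to the minimum as accepted (matching the markersDetected description) are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class MarkerFilter:
    """Keeps the last accepted position per marker ID.

    Results below min_confidence are ignored, so a known marker keeps its
    last accepted position when a low-confidence result arrives.
    """
    min_confidence: float
    positions: Dict[int, Vec3] = field(default_factory=dict)

    def update(self, marker_id: int, position: Vec3, confidence: float) -> bool:
        """Return True if the result was accepted, False if it was ignored."""
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence must be in [0.0, 1.0]")
        if confidence < self.min_confidence:
            return False  # unreliable result: keep the previous position
        self.positions[marker_id] = position
        return True
```

Raising `min_confidence` trades responsiveness for stability: fewer updates get through, but the ones that do are less likely to make the geometry jump.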

Min Marker Confidence

Sets the default minimum confidence value the marker tracking system uses to determine whether a detected marker position is correct.

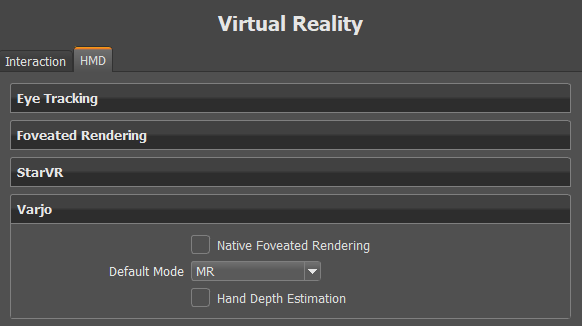

Varjo Preferences

We added Varjo HMD-specific preferences to the HMD tab for setting the default native foveated rendering state, the mode you enter, and hand depth estimation.

Native Foveated Rendering

Requires Eye Tracking to be enabled.

In the HMD tab > Varjo section, use this option to set the default state for how content in the periphery is rendered. When enabled, peripheral resolution (image quality) is reduced, while the area your eyes are tracked to is still rendered at high resolution. This improves performance in scenes with compute-intensive materials and when using real-time antialiasing. For more information on foveated rendering and its settings, see Custom Quality.

Default Mode

In the HMD tab > Varjo section, use this option to set the default viewing mode for a Varjo HMD. If you always work in mixed reality, set this to MR.

Hand Depth Estimation Preference

In the HMD tab > Varjo section, use this option to set the default state for real-world hands in MR. When enabled, VRED detects your real-world hands in the mixed reality video and shows them in front of a virtual object if they are closer than the object. When disabled, the rendering from VRED always occludes the real hands, even if the hands are closer than the rendered object.

| Hand Depth Estimation OFF | Hand Depth Estimation ON |
|---|---|

Python for Markers

These are the functions for the Python interface for implementing a custom version of marker tracking. They can be found in the vrImmersiveInteractionService documentation.

createMultiMarker

vrImmersiveInteractionService.createMultiMarker(multiMarkerName, markerNames, markerType)

Creates a multi marker by averaging the pose of multiple regular markers.

Parameters

- multiMarkerName (string) – The name of the multi marker. Can be chosen freely.

- markerNames (List[string]) – The names of the markers the multi marker consists of.

- markerType (vrXRealityTypes.MarkerTypes) – The type of the markers the multi marker consists of.

Returns

Return type

vrdMultiMarker
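The exact averaging VRED uses for a multi marker is not documented here. As a hedged illustration of what "averaging the pose of multiple regular markers" can mean, this pure-Python sketch takes the mean of the positions and a sign-aligned, normalized sum of the orientation quaternions, a common approximation when the orientations are close. The function name and the (w, x, y, z) quaternion convention are assumptions.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length


def average_pose(poses: List[Tuple[Vec3, Quat]]) -> Tuple[Vec3, Quat]:
    """Average several marker poses into one pose.

    Positions: arithmetic mean.
    Orientations: sign-aligned, normalized quaternion sum (approximate,
    reasonable when the individual orientations are close).
    """
    if not poses:
        raise ValueError("need at least one pose")
    n = len(poses)
    mean_pos = tuple(sum(p[i] for p, _ in poses) / n for i in range(3))

    ref = poses[0][1]
    acc = [0.0, 0.0, 0.0, 0.0]
    for _, q in poses:
        # q and -q encode the same rotation; flip q into ref's hemisphere.
        sign = 1.0 if sum(a * b for a, b in zip(q, ref)) >= 0.0 else -1.0
        for i in range(4):
            acc[i] += sign * q[i]
    # Every aligned term has a non-negative dot product with ref, so the
    # sum cannot cancel to zero.
    norm = math.sqrt(sum(c * c for c in acc))
    mean_rot = tuple(c / norm for c in acc)
    return mean_pos, mean_rot
```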

getDetectedMarkers

vrImmersiveInteractionService.getDetectedMarkers(markerType)

Gets all detected markers of a given type.

Parameters

- markerType (vrXRealityTypes.MarkerTypes) – The type of the marker

Returns

The detected markers

Return type

List[vrdMarker]

getMarker

vrImmersiveInteractionService.getMarker(name, markerType)

Gets a marker that has already been detected.

Parameters

- name (string) – The name of the marker

- markerType (vrXRealityTypes.MarkerTypes) – The type of the marker

Returns

The marker, or a null marker if no marker with that name and type combination has been detected

Return type

vrdMarker

getMinMarkerConfidence

vrImmersiveInteractionService.getMinMarkerConfidence()

See also: setMinMarkerConfidence.

Returns

The minimum marker confidence

Return type

float

setMinMarkerConfidence

vrImmersiveInteractionService.setMinMarkerConfidence(confidence)

Sets the minimum marker confidence. Markers detected with a lower confidence are ignored. Markers that are already known to the system are not updated if the new data has a lower confidence.

Parameters

- confidence (float) – The minimum confidence
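A minimal sketch, assuming it runs inside VRED where vrImmersiveInteractionService is available, of setting the threshold with the documented setter and reading it back with the documented getter. The helper name and its range check (based on the 0.0 to 1.0 range described under Marker Tracking) are hypothetical; the service is passed in so the helper can be tested with a stand-in.

```python
def apply_min_marker_confidence(service, confidence: float) -> float:
    """Set the minimum marker confidence and return the value now in effect.

    service is expected to be VRED's vrImmersiveInteractionService.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    service.setMinMarkerConfidence(confidence)
    return service.getMinMarkerConfidence()


# Inside VRED:
# apply_min_marker_confidence(vrImmersiveInteractionService, 0.8)
```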

markersDetected

vrImmersiveInteractionService.markersDetected(markers)

This signal is triggered when new markers are detected with a confidence equal to or higher than the minimum marker confidence.

Parameters

- markers (List[vrdMarker]) – The detected markers

markersUpdated

vrImmersiveInteractionService.markersUpdated(markers)

This signal is triggered when already detected markers are updated with a confidence equal to or higher than the minimum marker confidence.

Parameters

- markers (List[vrdMarker]) – The updated markers
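A minimal sketch of reacting to both signals, assuming the usual VRED Python signal pattern of `signal.connect(slot)` and a script running inside VRED where vrImmersiveInteractionService is available. The slot and helper names are hypothetical, and the slots only count the received vrdMarker objects rather than calling any vrdMarker methods.

```python
def on_markers_detected(markers):
    """Slot for markersDetected; markers is a list of vrdMarker objects."""
    print(f"{len(markers)} new marker(s) detected")


def on_markers_updated(markers):
    """Slot for markersUpdated; markers is a list of vrdMarker objects."""
    print(f"{len(markers)} marker(s) updated")


def connect_marker_signals(service):
    """Connect both marker signals of the given interaction service."""
    service.markersDetected.connect(on_markers_detected)
    service.markersUpdated.connect(on_markers_updated)


# Inside VRED:
# connect_marker_signals(vrImmersiveInteractionService)
```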