Use Action's Analyzer to compute the motion of the live-action camera and of objects in 3D space. Using the calculated position and motion of the virtual camera, you can match image sequences and place any element in the scene. The perspective of the element you place in the scene changes with the perspective of the background as the camera moves, because the virtual camera motion is intended to be identical to the motion of the actual camera that shot the scene.
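To illustrate why a matched virtual camera makes a placed element follow the perspective of the background, here is a minimal sketch of a pinhole projection. It is not the Analyzer's implementation: the function name `project`, the 1920x1080 image center, the focal length in pixels, and the rotation-free camera looking down +Z are all assumptions made for the example.

```python
def project(point, camera_pos, focal_px, center=(960.0, 540.0)):
    """Project a 3D point to pixel coordinates with a simple pinhole model.

    Assumptions (hypothetical, for illustration only): the camera has no
    rotation, looks down +Z, and the image center is the principal point.
    """
    x = point[0] - camera_pos[0]
    y = point[1] - camera_pos[1]
    z = point[2] - camera_pos[2]
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (center[0] + focal_px * x / z, center[1] + focal_px * y / z)


# Two elements placed at different depths in the scene.
near = (0.0, 0.0, 2.0)
far = (0.0, 0.0, 20.0)

# Move the camera sideways and watch the two elements shift by
# different amounts on screen (parallax).
for cam_x in (0.0, 0.5, 1.0):
    cam = (cam_x, 0.0, 0.0)
    print(cam_x, project(near, cam, 1000.0), project(far, cam, 1000.0))
```

The near element moves across the frame much faster than the far one as the camera translates. An element rendered through a virtual camera that follows the same path as the real camera shifts in the same way as the background at that depth.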

Use the following workflow as a quick start guide to the Analyzer. Follow the links for more detailed information.

Step 1

Select back or front/matte media (mono or stereo) to analyze and add an Analyzer node.

Optional steps:

- Perform a lens correction.

- Adjust the four corners of the perspective grid to set the focal length.

- Add manual trackers to obtain a more predictable and consistent result.
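The link between the four corners of a perspective grid and the focal length can be sketched with a standard two-vanishing-point calculation. This is an illustration of the general geometry, not a description of how the Analyzer computes it. The function `focal_from_grid` is hypothetical, and the sketch assumes the grid outlines a rectangle in the scene, square pixels, and a known principal point.

```python
import math


def _cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _line(p, q):
    """Homogeneous line through two 2D points."""
    return _cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def _intersect(l1, l2):
    """Intersection of two homogeneous lines, or None if (near) parallel."""
    x, y, w = _cross(l1, l2)
    if abs(w) <= 1e-9 * max(abs(x), abs(y), 1.0):
        return None
    return (x / w, y / w)


def focal_from_grid(corners, center):
    """Estimate the focal length in pixels from four grid corners.

    corners: four (x, y) pixel positions, in order around the grid.
    center:  assumed principal point (x, y) in pixels.
    Returns None when the grid does not provide two usable vanishing points.
    """
    p0, p1, p2, p3 = corners
    # Opposite edges of the grid are parallel in the scene, so each pair
    # of opposite edges meets at a vanishing point in the image.
    v1 = _intersect(_line(p0, p1), _line(p3, p2))
    v2 = _intersect(_line(p1, p2), _line(p0, p3))
    if v1 is None or v2 is None:
        return None
    # For perpendicular scene directions: (v1 - c) . (v2 - c) = -f^2
    dot = ((v1[0] - center[0]) * (v2[0] - center[0])
           + (v1[1] - center[1]) * (v2[1] - center[1]))
    if dot >= 0:
        return None
    return math.sqrt(-dot)


# Synthetic grid corners, with the principal point at the origin.
corners = [(0.0, 0.0), (1000.0 / 6.0, 0.0), (400.0, 200.0), (250.0, 250.0)]
print(focal_from_grid(corners, (0.0, 0.0)))
```

The example corners were generated by projecting a tilted rectangle with a focal length of 1000 pixels, so the printed estimate should be close to 1000. A grid with little visible perspective (nearly parallel edges) gives no usable estimate, which is why the function returns `None` in that case.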

Tracking using the Analyzer Node

Step 2

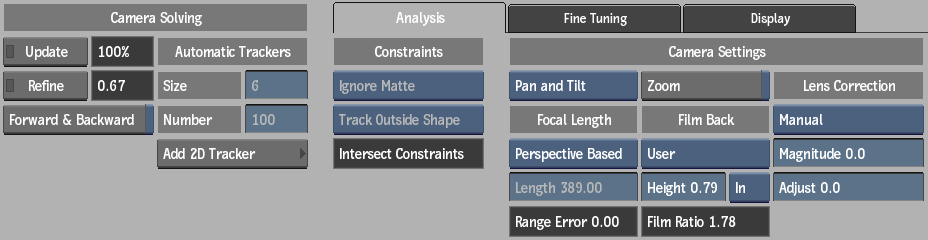

Perform Camera Tracking in the Analyzer menu.

Optional steps:

- Add mask constraints to moving areas or to other areas that you want to exclude from the analysis.

- Add properties of the camera that shot the footage to be analyzed.
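The idea behind a mask constraint can be sketched as a filter that drops candidate track points falling inside a masked region, so that moving areas do not influence the camera solve. This is a conceptual sketch only: the names `inside_polygon` and `exclude_masked`, and the representation of a mask as a list of polygon vertices, are assumptions and do not reflect how the Analyzer stores or applies masks.

```python
def inside_polygon(point, polygon):
    """Return True if a 2D point lies inside a polygon (ray casting test)."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Count only edges that straddle the horizontal line through the point.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def exclude_masked(points, masks):
    """Keep only the points that fall outside every mask polygon."""
    return [p for p in points
            if not any(inside_polygon(p, m) for m in masks)]


# Hypothetical mask drawn around a moving object, in pixel coordinates.
mask = [(100.0, 100.0), (300.0, 100.0), (300.0, 300.0), (100.0, 300.0)]
candidates = [(50.0, 50.0), (200.0, 200.0), (400.0, 150.0)]
print(exclude_masked(candidates, [mask]))
```

Here the candidate at (200, 200) lies inside the mask and is dropped, while the other two remain available for the camera analysis.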

Step 3

Fine-tune the camera tracking analysis, then recalibrate or refine it. This step is optional, depending on the results of your initial analysis.

Step 4

Create a Point Cloud of selected points after the analysis.

Step 5

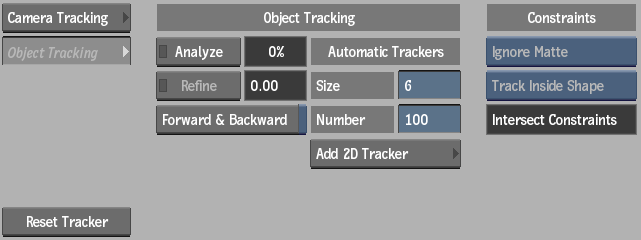

Perform Object Tracking. If needed, after camera tracking, you can track moving objects in the scene, such as objects inside the masks that were excluded from the camera tracking analysis.