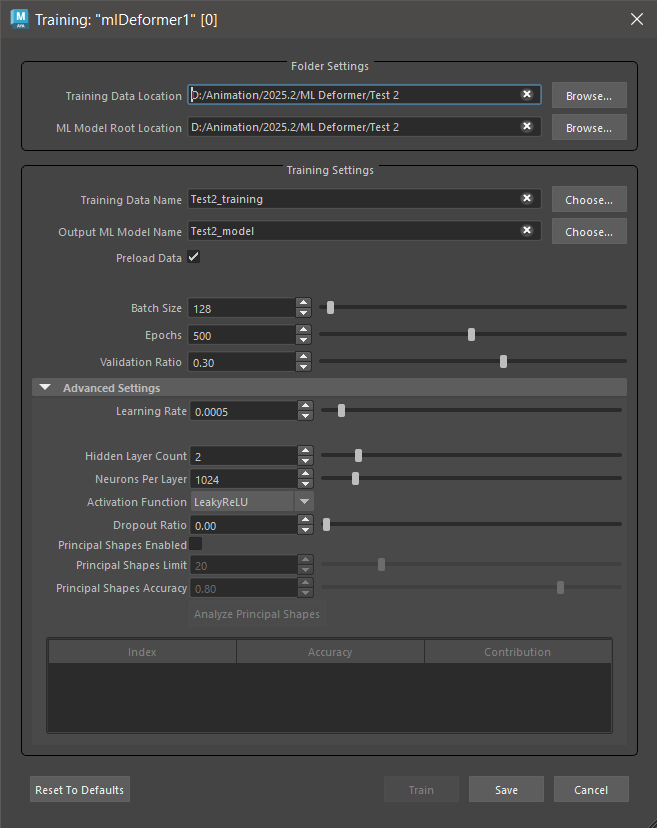

The ML Deformer Training settings are where you configure how the machine learning model is trained on the training data. See ML Deformer Export Training Data window for details on how to export the training data.

To open the ML Deformer Training settings

- In the ML Deformer Attributes tab, click the Train Model… icon.

- In the ML Deformer Attributes tab, right-click the ML Model column and choose Train Model….

Folder Settings

This section lets you set the path to your exported training data and trained models.

- Training Data Location

- Shows the path to the exported training data that you will train your model on. See ML Deformer Export Training Data window for details on how to export training data. Click Browse to navigate to the folder that contains your training data.

Note: This can be a temporary directory as the training data is not needed once the model is trained, unless you want to retrain it with the same data.

- ML Model Root Location

- Shows the directory where trained ML models are saved, which is inside the project folder by default. ML models are required for the ML deformer to function, so they should typically be kept in the same project as the scene to facilitate easier sharing.

- Click Browse to navigate to a folder where you want to store your ML Model.

Training Settings

This section lets you define the training data used to teach the ML Deformer.

- Training Data Name

- Specifies the name of the training data set to use to train the model. This should match the name specified when exporting the training data in the ML Deformer Export Training Data window.

- Output ML Model Name

- The name of the folder that will be created to hold the trained ML model file and its associated metadata. This folder will be given the suffix ".mldf". For example, exporting with the name "test" will create a folder called test.mldf in the ML Model Root Location folder in the Training Data Name directory.

- Preload Data

- Activate Preload Data to load all of the training data into memory, instead of loading it in batches, during the training process. Enabling Preload Data is recommended if your training data set is able to fit entirely in memory, as this makes the training process significantly faster. If training on GPU, the speed difference can be even more significant.

- Learn Surface

- Activate Learn Surface to train the deformer with additional vertex frames, rather than having the model guess the deltas and the surface. This can improve noisy results. To use it, make sure that Delta Mode is set to Surface and Export Surface Information is enabled in the Export Training Data settings.

Note: This setting causes the model to become rotationally dependent during training since it depends on the space the mesh is in.

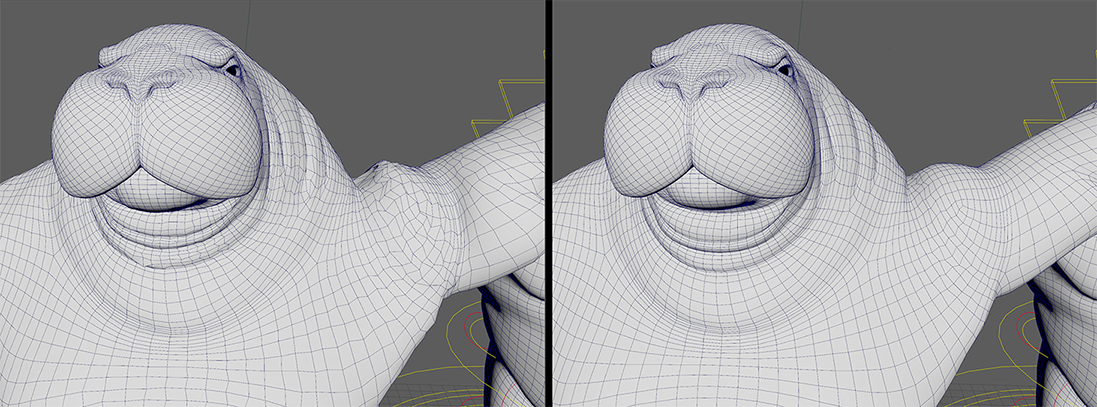

Learn Surface: Off vs Learn Surface: On

- Batch Size

- Specify a size for the "batches" that the data set is divided into. The batches are loaded into memory together, so you need enough RAM (and VRAM, if using the GPU) to support the specified batch size.

- Epochs

- Lets you set how many times the training processes the complete data set. The number of Epochs impacts how long it takes to train the model.

- Experiment with smaller epoch counts (< 100) before increasing them if you are not happy with the result. Using more epochs for training lets the model learn more from the data, but beware of "overfitting".

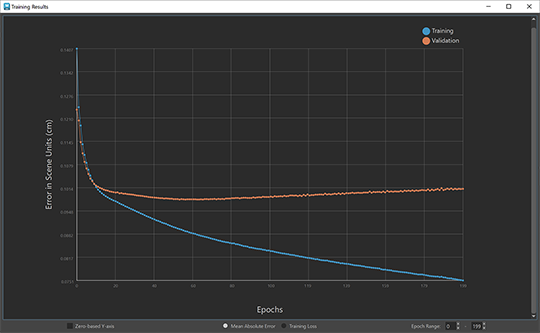

- Overfitting occurs when the model becomes too adept at matching the specific data it's been given and is unable to generalize to new control values. See the ML Deformer Training Results window to analyze the overfitting deltas in your training data.

Overfitting example: Blue line represents Training and Orange represents Validation

- To check for overfitting, compare the training and validation loss: if the validation error is larger than the training error, this can be a sign that the model is overfitting to the training data (see the example above).

- Validation Ratio

- Specify the percentage of the training data samples that should be set aside for validation. The validation set is a random sampling of the training data that will not be used to train. It provides a useful indication of how well the model performs on data it hasn't seen, and can also be used to check for overfitting.
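As a rough illustration of how Batch Size, Validation Ratio, and the overfitting check relate, the following Python sketch splits a data set at random, counts the batches processed per epoch, and compares final loss values. It is not the ML Deformer's implementation: the function names, the use of a 0 to 1 fraction for the ratio, and the `tolerance` parameter are all assumptions made for this example.

```python
import math
import random


def split_and_batch(num_samples, validation_ratio, batch_size, seed=0):
    """Split sample indices into training and validation sets, then count batches.

    validation_ratio is treated as a fraction in [0, 1) here (an assumption;
    the UI may express this value differently).
    """
    if not 0.0 <= validation_ratio < 1.0:
        raise ValueError("validation_ratio must be in [0, 1)")
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")

    indices = list(range(num_samples))
    # The validation set is a random sampling of the training data.
    random.Random(seed).shuffle(indices)

    num_validation = round(num_samples * validation_ratio)
    validation = indices[:num_validation]
    training = indices[num_validation:]

    # Each epoch processes every training sample once, one batch at a time.
    batches_per_epoch = math.ceil(len(training) / batch_size)
    return training, validation, batches_per_epoch


def looks_overfit(training_loss, validation_loss, tolerance=0.0):
    """Return True if the final validation loss exceeds the final training loss
    by more than `tolerance` (a hypothetical threshold for this sketch)."""
    return validation_loss[-1] - training_loss[-1] > tolerance


training, validation, batches = split_and_batch(1000, 0.1, 64)
print(len(training), len(validation), batches)  # 900 100 15
```

With 1000 samples, a ratio of 0.1, and a batch size of 64, each epoch runs 15 batches, so 100 epochs means 1500 batch updates. This is why Batch Size and Epochs together determine training time.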

Advanced Settings

The Advanced ML Deformer training settings give you further ways to control the approximation. It is a good idea to stay with the default settings, and only adjust the Advanced Settings when you have a specific need.

- Learning Rate

- Configure how large a learning "step" the model must take per batch to adjust itself based on the results of that batch. Smaller values require more epochs to train, and can become overloaded, while larger values are more chaotic and may not produce a good approximation.

- Hidden Layer Count

- The number of layers in the neural network, not including the input and output layers. Currently, all the settings are applied uniformly to each hidden layer.

- Specifying more layers helps the model approximate complex relationships between the controls and the resulting deformed geometry, but makes the model slower to run and/or train, which can create inconsistencies.

- Neurons per layer

- The number of artificial neurons that should exist in each layer of the model.

- These neurons take a set of input values from the layer above and produce an output value. Increasing the number of neurons lets the model learn a larger variety of deformations. However, increasing the number of neurons also increases the size of the model and makes it slower to run and/or train (especially for high resolution meshes), and contributes to overfitting.

- In general, deep neural networks are more efficient than very wide ones.

- Activation Function

- Choose the function to apply to the sum of a neuron's inputs to generate an output. These functions introduce non-linearity to the output which allows the model to learn nonlinear relationships between the inputs and outputs.

- Sigmoid

- An S-shaped curve function.

- See Sigmoid from the PyTorch documentation for a description.

- TanH

- A hyperbolic tangent function.

- See TanH from the PyTorch documentation for a description.

- Leaky ReLU

- Similar to ReLU, except instead of all negative values being 0, they have a small slope, for example: f(x) = 0.01 * x.

- This prevents the case of neurons dying due to becoming stuck on negative values.

- See LeakyReLU from the PyTorch documentation for a description.

- ReLU (Rectified Linear Unit)

- A simple piecewise function where f(x) = x if x is positive and f(x) = 0 if x is negative.

- Because of its simplicity, this function arrives at a good result more quickly than other functions. However, because the slope is zero for negative values, neurons sometimes become stuck on negative values and can no longer be trained.

- See ReLU from the PyTorch documentation for a description.

- Linear

- Linear is the most basic activation function: f(x) = x. A network composed solely of linear activations is unable to learn nonlinear deformations, so it is best used only in circumstances where that is desired or when paired with other activation functions.

- See Linear from the PyTorch documentation for a description.

- Dropout Ratio

- Use the Dropout Ratio to help prevent overfitting. When using Dropout Ratio, a fraction of the inputs set by the ratio, for each layer, is set to zero during training. Setting the inputs to zero causes the model to learn redundant connections to become more robust.

- However, this method can have undesirable results, such as linking controls to outputs that they should not affect. For example, moving the wrist joint might cause deformation in the shoulder.

- Reset to Defaults

- Restore the ML Deformer Training settings default options.

- Train

- Begins the training process for the ML Deformer.

- Save

- Saves the settings in the ML Deformer Training settings so you can use them again. To reuse previous ML Deformer Training Settings click Choose in the Training Data Name and Output ML Model Name fields.

- Cancel

- Close the ML Deformer Training settings.
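The Activation Function options listed under Advanced Settings can be written out in a few lines of plain Python. This is a minimal sketch for comparison only, not the code the ML Deformer runs: the function names and the `neuron_output` helper are made up for this example, and the leaky slope of 0.01 is taken from the example value in the Leaky ReLU description.

```python
import math


def sigmoid(x):
    """S-shaped curve mapping any input to the range (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # Equivalent form that avoids overflow for large negative inputs.
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x):
    """Hyperbolic tangent mapping any input to the range (-1, 1)."""
    return math.tanh(x)


def leaky_relu(x, negative_slope=0.01):
    """Like ReLU, but negative inputs keep a small slope instead of becoming 0."""
    return x if x >= 0 else negative_slope * x


def relu(x):
    """f(x) = x for positive x, 0 otherwise."""
    return max(0.0, x)


def linear(x):
    """f(x) = x, which introduces no non-linearity."""
    return x


def neuron_output(inputs, weights, bias, activation):
    """Apply an activation to the weighted sum of a neuron's inputs
    (hypothetical helper for illustration)."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return activation(total)


# The same weighted sum (-0.5) passed through each activation.
for fn in (sigmoid, tanh, leaky_relu, relu, linear):
    print(fn.__name__, neuron_output([1.0, -2.0], [0.5, 0.5], 0.0, fn))
```

For the negative sum in this example, ReLU outputs 0 while Leaky ReLU outputs a small negative value, which is the difference that keeps a neuron trainable when its inputs are negative.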

Principal Shape Settings

- Principal Shapes

- Activate Principal Shapes so the ML Deformer computes a series of base delta poses for the Target deformations (relative to the rest pose), instead of evaluating the deltas (or differences) between the base and target vertices for each pose. For steps on how to use Principal Shapes, see Create ML Deformer training data using Principal Shapes.

Principal Shapes are similar to blend shapes: to create the final result, they have associated weights that are blended together. When Principal Shapes are added to the base, they recreate the target deformation. That way, rather than training to approximate deltas, the ML Deformer uses the Principal Shapes to map the control values to these weights.

Using Principal Shapes is useful because the number of weights to learn is smaller than the number of deltas, making the resulting ML model quicker to train and evaluate. (You may need to reduce the neurons per layer to avoid overfitting.)

Principal Shapes are calculated from training poses using singular value decomposition.

Note: Make sure that you have enough RAM to hold the training data set as all training poses are loaded into memory to perform this calculation.

- Shape Construction

- The manner in which the deformer learns to reproduce weights from principal shapes.

- Fixed principal shapes

- Forces the model to reproduce weights based on learnings from SVD analysis.

- Tune principal shapes during training

- Parameterizes the principal shapes, allowing the model to adjust them during training. This method takes longer and requires more memory, but can potentially improve results (especially if not all the training poses fit the SVD analysis). This is the default.

- Principal Shapes Limit

- The maximum number of Principal Shapes to generate. This value acts as a hard limit, so the computation uses only up to this many shapes even if they do not reach the desired accuracy. An error message appears if there are more samples than deltas in the training data.

- Principal Shapes Accuracy

- The level of accuracy that the combination of principal shapes should achieve across the sample poses. The ML Deformer then generates the number of Principal Shapes required to recreate the target deformations to meet this level of accuracy.

Note: This is the accuracy using perfect weight values, so the result from the trained model will be less accurate.

- Shape Analysis

- How principal shapes are handled when not all of the training poses fit into memory.

- Attempt to use all poses

- Attempt to use all the poses in the order they were added and display an error if the memory limit is reached.

- Truncate poses to memory limit

- Attempt to use all the poses in the order they were added until the memory limit is reached (truncating the rest). This will cause some data loss.

- Randomly select poses to memory limit

- Use poses in a random order until the memory limit is reached (truncating the rest). This will cause some data loss, but will give a relatively well-rounded representation of the data set.
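The three Shape Analysis options can be compared with a short Python sketch. It is illustrative only and makes several assumptions: the memory limit is modeled as a maximum pose count (`max_poses`), and the function name and mode strings are hypothetical, not identifiers from the ML Deformer.

```python
import random


def select_poses(poses, max_poses, mode, seed=0):
    """Choose which training poses to analyze under a memory limit.

    max_poses stands in for the memory limit (an assumption for this sketch).
    mode is one of "all", "truncate", or "random" (hypothetical names).
    """
    poses = list(poses)

    if mode == "all":
        # Attempt to use all poses: fail if they do not fit.
        if len(poses) > max_poses:
            raise MemoryError("training poses exceed the memory limit")
        return poses

    if mode == "truncate":
        # Keep poses in the order they were added and drop the rest.
        return poses[:max_poses]

    if mode == "random":
        # Keep a random subset, which samples the whole data set more evenly.
        if len(poses) <= max_poses:
            return poses
        return random.Random(seed).sample(poses, max_poses)

    raise ValueError(f"unknown mode: {mode!r}")


poses = list(range(10))
print(select_poses(poses, 4, "truncate"))  # [0, 1, 2, 3]
print(select_poses(poses, 4, "random"))    # four poses drawn from the full range
```

Truncating keeps only the earliest poses, so any poses added late in the export are never analyzed, whereas random selection draws from the whole set. This is why the random option tends to give a more well-rounded representation despite discarding the same amount of data.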