Transferring complex deformations from a target model to a mesh using the ML Deformer

The ML Deformer reads complex deformation systems and learns to approximate them in a lighter, more portable form by training and then using a machine learning model.

The ML Deformer works by learning from an animation sequence that puts the target geometry through a wide range of motion. This animation sequence can consist of motion capture data, existing keyframed animations, randomization through the ML Deformer's Pose Generation and Principal Shapes functionality, or a combination of these techniques. This sample data, along with the values of the driver controls that influence the pose on each frame, is used to train the ML model.
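The idea of learning deformations from sampled poses can be illustrated with a minimal sketch. This is not the ML Deformer's actual model or API: `complex_deformer`, `features`, and `approximate` are hypothetical names, the "expensive" deformer is a toy function, and an ordinary least-squares fit in NumPy stands in for the neural network. It only shows the shape of the data: driver-control values per frame as input, per-vertex deltas from the base mesh as output.

```python
import numpy as np

rng = np.random.default_rng(0)

def complex_deformer(base, controls):
    """Hypothetical stand-in for an expensive deformer stack.

    base:     (verts, 3) rest positions
    controls: (frames, 2) driver-control values, here "bend" and "twist"
    returns:  (frames, verts, 3) deformed positions
    """
    bend, twist = controls[:, 0:1], controls[:, 1:2]
    offset = np.zeros((controls.shape[0],) + base.shape)
    offset[:, :, 1] = bend * base[:, 0] ** 2      # bend lifts Y by x^2
    offset[:, :, 2] = twist * bend * base[:, 0]   # twist and bend together shift Z
    return base + offset

def features(controls):
    """Constant, linear, and quadratic terms of the two controls."""
    c0, c1 = controls[:, 0], controls[:, 1]
    return np.stack([np.ones_like(c0), c0, c1, c0 * c1, c0**2, c1**2], axis=1)

# Training data: random poses and the deltas the complex deformer produces.
base = rng.uniform(-1.0, 1.0, size=(50, 3))
train_controls = rng.uniform(-1.0, 1.0, size=(200, 2))
train_deltas = complex_deformer(base, train_controls) - base

# "Training": fit one weight matrix from control features to flattened deltas.
weights, *_ = np.linalg.lstsq(
    features(train_controls), train_deltas.reshape(len(train_controls), -1), rcond=None
)

def approximate(controls):
    """Cheap approximation: base mesh plus predicted deltas."""
    deltas = features(controls) @ weights
    return base + deltas.reshape(-1, *base.shape)

# Compare the approximation with the original deformer on unseen poses.
test_controls = rng.uniform(-1.0, 1.0, size=(10, 2))
max_error = np.abs(
    approximate(test_controls) - complex_deformer(base, test_controls)
).max()
print(f"max vertex error on unseen poses: {max_error:.2e}")
```

The toy deformer here is exactly representable by the chosen features, so the fit is essentially exact. Real deformation stacks are not, which is why the range and variety of poses in the training sequence matters.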

Once the model is trained, the original deformations are approximated much more efficiently, allowing for improved interactivity when animating, or faster renders when used in crowd scenes. You can toggle between the ML Deformer and the original complex source deformer, using the approximation while animating and the complex deformer during final rendering for best results.

Sample ML Deformer animation in the Content Browser

- Depending on the rig, setting the Delta Mode to Surface may produce artifacts and jagged, incorrect deformations. This happens when the surface vertex frames aren't calculated consistently, often due to overlapping vertices in certain poses. Removing the bad poses from the training set may improve the results, but the ML Deformer will still perform poorly on those and similar poses after training.

- When trained across a large number of controls, the ML model tends to learn incorrect associations between controls and deformations in unrelated parts of the mesh. Training on poses that trigger fewer controls at once can help with this issue.
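The last point, that poses triggering many controls at once can teach the model incorrect associations, can be seen in a toy linear example. This is only a sketch under simplifying assumptions: two controls, two mesh regions, and a least-squares fit in place of the ML Deformer's actual network. The names `true_weights` and `fit` are hypothetical.

```python
import numpy as np

# Toy ground truth: control A only moves region 1, control B only moves region 2.
# Rows are controls, columns are mesh regions.
true_weights = np.array([[1.0, 0.0],
                         [0.0, 1.0]])

def fit(control_values):
    """Least-squares fit of control values to the deltas they produce."""
    deltas = control_values @ true_weights
    weights, *_ = np.linalg.lstsq(control_values, deltas, rcond=None)
    return weights

t = np.linspace(-1.0, 1.0, 20)

# Training poses where both controls always move together.
coupled = np.stack([t, t], axis=1)

# Training poses where only one control moves at a time.
isolated = np.concatenate([
    np.stack([t, np.zeros_like(t)], axis=1),
    np.stack([np.zeros_like(t), t], axis=1),
])

coupled_weights = fit(coupled)
isolated_weights = fit(isolated)

print("learned from coupled poses:\n", coupled_weights)
print("learned from isolated poses:\n", isolated_weights)
```

With the coupled poses, the data cannot tell the two controls apart, so the fit spreads each region's motion evenly across both controls (every weight is 0.5) and control A appears to deform region 2. With poses that move one control at a time, the fit recovers the true mapping.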

Basic Workflow

- Add an ML Deformer to the geometry you want to deform.

- Move the ML Deformer in the deformation stack ahead of the deformers you want to approximate.

- Create a target based on those deformers.

See Create an ML Deformer for step-by-step instructions.

You can also use a Source and Target object with the ML Deformer to approximate complex deformations:

- Add and assign an ML Deformer to a Source object.

- Configure and assign driver controls to influence the poses and the corresponding complex/original deformations.

- Use existing animations or generate random poses, and export them as training data for the model to learn from. See ML Deformer Export Training Data window.

- Configure training parameters and train the model. See ML Deformer Training Settings.

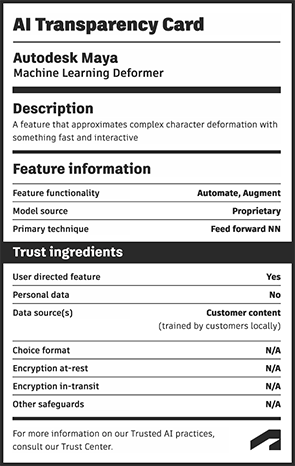

AI Transparency

At Autodesk, we're dedicated to trustworthy AI innovation. Click on the image to learn more.

See Create an ML Deformer using separate Target geometry for step-by-step instructions.