Inference

Use the Inference node to apply effects to your clips using a trained ONNX machine learning model.

| Access | To access the Inference menu, use:

|

| Input | Like Matchbox, the Inference node has a variable number of inputs. The number of inputs must match the number of inputs required by the model. There are four types of inputs:

|

| Outputs | The number of outputs is variable. There are two types of outputs:

|

File Format

The Inference node only works with ONNX models. For more information about ONNX models, visit the following websites:

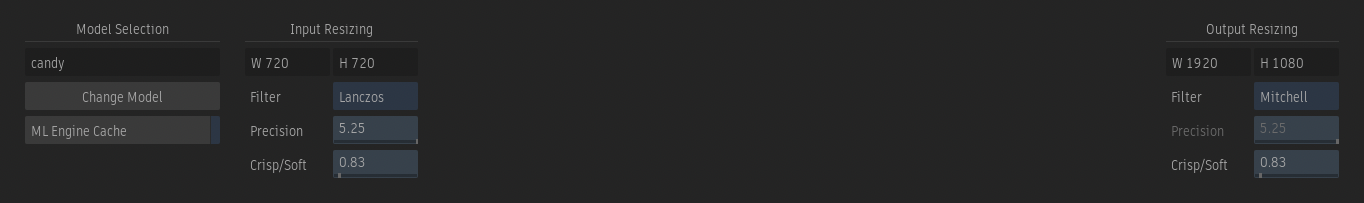

Model Selection

The Load Model file browser opens when an Inference node is added to a Batch, Batch FX, or Modular Keyer Schematic view. No models are provided by default with Flame Family products, so you must select an ONNX model that you either created or downloaded. Two types of files can be loaded: .onnx and .inf files.

When you select an .onnx file, the software looks for a corresponding .json file with the same name in the directory. That JSON file defines the inputs, outputs, and attributes for a model. This is the equivalent of the XML file for a Matchbox shader. If no associated JSON sidecar file is present in the same directory, the application applies the default inputs, outputs, and attributes to the node.
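The lookup rule described above (same directory, same name, .json extension) can be illustrated with a minimal Python sketch. This is not the application's code; the function name `find_sidecar` and the example path are hypothetical, and the sketch only shows how you could check from a script whether a model has a sidecar before loading it.

```python
from pathlib import Path
from typing import Optional


def find_sidecar(model_path: str) -> Optional[Path]:
    """Return the JSON sidecar that sits next to an .onnx file, or None.

    Hypothetical helper: it mirrors the documented rule (same directory,
    same base name, .json extension) and nothing more.
    """
    model = Path(model_path)
    # Swap the .onnx extension for .json, keeping directory and base name.
    candidate = model.with_suffix(".json")
    return candidate if candidate.is_file() else None


if __name__ == "__main__":
    # Example path is an assumption; replace it with a real model location.
    sidecar = find_sidecar("/path/to/models/my_model.onnx")
    if sidecar is None:
        print("No sidecar found: default inputs, outputs, and attributes apply.")
    else:
        print(f"Sidecar found: {sidecar}")
```

When the helper returns None, the node would fall back to the default inputs, outputs, and attributes, as described above.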

An .inf file is a package containing the ONNX model and its associated JSON and thumbnail files. This package can be created using the inference_builder command-line tool available in /opt/Autodesk/<application version>/bin. The tool creates an encrypted .inf file that only a Flame Family product can read, like a Matchbox .mx file.

For more information on how to create an .inf file, see the Inference Builder page.

Model Initialisation

When a model is loaded, the inference is automatically applied to the image. This process can take several minutes, particularly if a cache file for this model does not already exist. You can abort the initialisation of an inference while the Preparing dialog window is displayed. Once the preparation step has completed and the application is caching or loading the model, the initialisation can no longer be aborted.

Settings

The Inference node does not offer any controls over the models themselves. The only controls available are model caching (Linux only) and the choice of filters used on input and output when clips must be resized to the image size required by the model.

Rendering Platform

On Rocky Linux, an inference can be run using either the CPU or GPU. On macOS, the inference is always run on the CPU.

Running the inference is faster on the GPU, but frames that are too large to be rendered on the GPU can still be rendered on the CPU.

Bit Depth Support (Linux Only)

On Rocky Linux, when using the GPU rendering platform, you can choose to run a model in 16-bit fp or 32-bit fp. In previous versions, models were only run in 32-bit fp.

Selecting 16-bit fp offers the following advantages:

- It allows running a model on higher resolution images, since running in 16-bit fp requires less memory.

- It provides better performance.
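The memory argument can be made concrete with a little arithmetic. A 16-bit float takes 2 bytes and a 32-bit float takes 4, so a single image tensor halves in size. The sketch below is illustrative only: the function name and the UHD, three-channel frame are assumptions, and the real memory footprint of a model also depends on its intermediate layers, which are not counted here.

```python
# Bytes used by one floating-point value at each bit depth.
BYTES_PER_VALUE = {"16-bit fp": 2, "32-bit fp": 4}


def tensor_bytes(width: int, height: int, channels: int, bit_depth: str) -> int:
    """Size in bytes of a single width x height x channels image tensor."""
    return width * height * channels * BYTES_PER_VALUE[bit_depth]


if __name__ == "__main__":
    # Assumed example: one UHD frame with three channels.
    for depth in ("32-bit fp", "16-bit fp"):
        size = tensor_bytes(3840, 2160, 3, depth)
        print(f"{depth}: {size / 1024**2:.1f} MiB per frame tensor")
```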

Caching (Linux Only)

Use the ML Engine Cache button to enable NVIDIA's TensorRT cache. This option is disabled by default because generating a TensorRT engine can take several minutes. The cache requires a separate TensorRT engine for each combination of ONNX model, GPU, and image size, so a new engine is generated the first time a given combination is used. Each engine is stored in /opt/Autodesk/cache/tensorgraph/models and is reused the next time the same combination of ONNX model, GPU, and image size is required, which makes the node much faster to use.
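The "one engine per combination" behaviour can be pictured as a lookup keyed on the model, the GPU, and the image size. The sketch below is a conceptual, in-memory illustration only. The class `EngineCache`, its `build` callback, and the example model and GPU names are hypothetical, and nothing here reflects how the application actually names or stores files in its cache directory.

```python
from typing import Callable, Dict, Tuple

# (model name, GPU name, width, height): all four must match for a cache hit.
EngineKey = Tuple[str, str, int, int]


class EngineCache:
    """Hypothetical in-memory stand-in for a per-combination engine cache."""

    def __init__(self, build: Callable[[EngineKey], object]) -> None:
        self._build = build              # slow step: generates a new engine
        self._engines: Dict[EngineKey, object] = {}
        self.builds = 0                  # how many engines were generated

    def get(self, model: str, gpu: str, width: int, height: int) -> object:
        key = (model, gpu, width, height)
        if key not in self._engines:
            # First use of this combination: pay the generation cost once.
            self._engines[key] = self._build(key)
            self.builds += 1
        return self._engines[key]


if __name__ == "__main__":
    cache = EngineCache(build=lambda key: f"engine for {key}")
    cache.get("model_a.onnx", "GPU 0", 1920, 1080)  # generated
    cache.get("model_a.onnx", "GPU 0", 1920, 1080)  # reused
    cache.get("model_a.onnx", "GPU 0", 3840, 2160)  # new size: generated
    print(cache.builds)
```

Changing any one element of the key, such as the image size, counts as a new combination and triggers a new generation.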

There is no caching mechanism available on macOS.

Resizing

When a model requires an image of a fixed resolution, an automatic resize takes place before and after the inference. The fixed width and height values are displayed in the Input Resizing section, and resizing filter parameters let you customize both the input and output resizing operations.

The KeepAspectRatio token of the JSON sidecar file can be used to preserve the input clip's aspect ratio through the resizing process if the model is sensitive to aspect ratio. The default token value is false to preserve as much precision as possible.
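The difference between the two settings can be sketched as a size calculation. This is only an illustration under stated assumptions: the function `resized_size` is hypothetical, and fitting the clip inside the model's frame is one common way to preserve aspect ratio. The source does not say how the application fills or crops the remaining area.

```python
from typing import Tuple


def resized_size(
    src_w: int,
    src_h: int,
    model_w: int,
    model_h: int,
    keep_aspect_ratio: bool = False,
) -> Tuple[int, int]:
    """Size of the clip after resizing towards the model's fixed resolution."""
    if not keep_aspect_ratio:
        # Stretch to the model's exact size; aspect ratio may change.
        return model_w, model_h
    # Assumption: fit the clip inside the model frame with one uniform scale.
    scale = min(model_w / src_w, model_h / src_h)
    return max(1, round(src_w * scale)), max(1, round(src_h * scale))


if __name__ == "__main__":
    # Assumed example: an HD clip fed to a model that expects 512 x 512.
    print(resized_size(1920, 1080, 512, 512))
    print(resized_size(1920, 1080, 512, 512, keep_aspect_ratio=True))
```

With the default setting, every pixel of the model's frame carries image data, which is consistent with the stated goal of preserving as much precision as possible.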

Hiding the Inference Node

The Inference node can be hidden from the software using the DL_DISABLE_INFERENCE_NODE environment variable. This is targeted towards larger studios that would like to prevent the usage of the node in their pipeline.

When the node is hidden, it does not appear in the Node bins and cannot be added using the Search widget. If an existing setup containing an Inference node is loaded in an application running with the variable set, the node appears greyed out in the Schematic view and does not output media. In addition, the setup cannot be rendered or sent to Burn or Background Reactor.
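A pipeline script can check whether the variable is present in the environment that launches the application. The sketch below is a hypothetical helper: it only tests whether DL_DISABLE_INFERENCE_NODE is defined, because this page does not state which value, if any, the application expects.

```python
import os
from typing import Mapping, Optional

VARIABLE = "DL_DISABLE_INFERENCE_NODE"


def inference_node_variable_set(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if the variable is defined in the given environment.

    Assumption: being defined is what matters; the expected value is not
    documented on this page, so the value itself is not inspected.
    """
    env = os.environ if env is None else env
    return VARIABLE in env


if __name__ == "__main__":
    if inference_node_variable_set():
        print(f"{VARIABLE} is defined in this environment.")
    else:
        print(f"{VARIABLE} is not defined in this environment.")
```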